Introduction to Garak: NVIDIA’s LLM Vulnerability Scanner

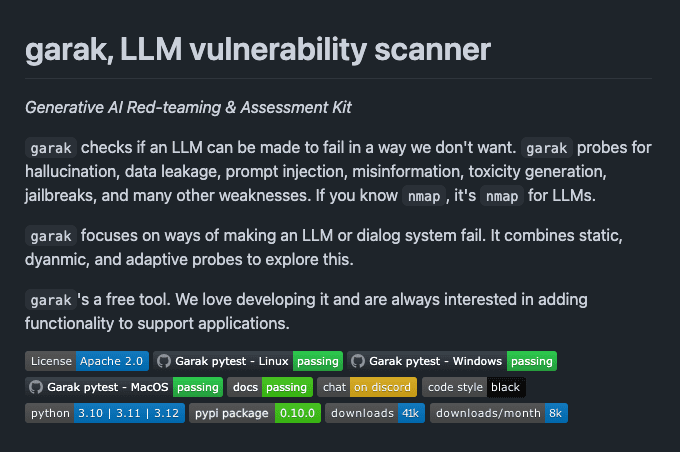

NVIDIA has recently launched an innovative project named Garak, a Large Language Model (LLM) Vulnerability Scanner. This tool is designed to perform AI red-teaming and vulnerability assessments on LLM applications, marking a significant advancement in the field of AI security.

The Importance of LLM Vulnerability Scanning

With the increasing adoption of AI and LLMs in various industries, the need for robust security measures has never been more critical. LLMs, while powerful, are susceptible to various vulnerabilities that can be exploited by cybercriminals. According to Akamai CTO Robert Blumofe, the cyber threat landscape has evolved from unsophisticated hacktivists to organized attacks aimed at extorting money. This shift underscores the necessity for tools like Garak to safeguard AI applications.

Features and Capabilities of Garak

Garak is designed to perform comprehensive vulnerability assessments on LLM applications. It enables AI red-teaming, a process where AI systems are rigorously tested to identify potential weaknesses. This proactive approach helps in mitigating risks before they can be exploited. NVIDIA’s initiative aligns with the growing trend of integrating safeguards into LLM-driven chatbots to combat cyber threats, as highlighted by Lakera’s recent funding to protect enterprises from LLM vulnerabilities.

The Role of AI in Cybersecurity

AI and automation are playing an increasingly vital role in cybersecurity defenses. Tools like Garak leverage AI to detect and remediate vulnerabilities, enhancing the overall security posture of enterprises. GitHub’s latest AI tool, which can automatically fix code vulnerabilities, is another example of how AI is being used to improve security. This tool combines the real-time capabilities of GitHub Copilot with the CodeQL semantic code analysis engine to suggest fixes for vulnerabilities, saving developers time and effort.

Addressing Ethical Considerations

While AI-powered tools offer significant benefits, they also raise ethical considerations. The potential for AI-generated fixes to introduce new vulnerabilities or be biased is a concern that needs to be addressed. Similarly, the use of in-memory protection in vulnerability remediation platforms like Vicarius requires validation to ensure accuracy and effectiveness. These ethical considerations are crucial for the responsible deployment of AI technologies.

The Future of AI and Cybersecurity

The launch of Garak by NVIDIA is a testament to the ongoing efforts to enhance AI security. As AI continues to evolve, the development of tools for vulnerability assessment and remediation will be essential. The integration of AI in cybersecurity is not just about addressing current threats but also about preparing for future challenges. The success of projects like Garak will inspire further innovation in AI-driven security solutions.

Related Articles

- 5 Ways to Implement AI into Your Business Now

- 0G Labs: The World’s First Decentralized AI Operating System (DAIOS)

- LinerAI Partners with Theta Network to Revolutionize AI-Powered Academic Search

- Top 7 Legal AI Tools for Law Practitioners

- Model-Based Design AI: Accelerate Medical Innovation

Looking for Travel Inspiration?

Explore Textify’s AI membership

Need a Chart? Explore the world’s largest Charts database