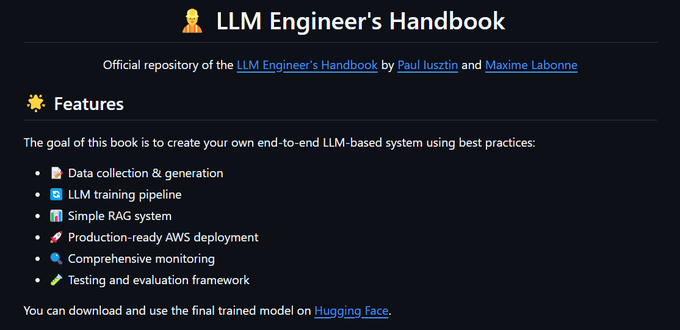

Introduction to the LLM Engineer’s Handbook

The LLM Engineer’s Handbook was recently updated and now has 1.6k stars on GitHub. It is a useful resource for researchers and developers working with large language models (LLMs), with detailed coverage of the LLMOps pipelines used to train, deploy, and monitor LLMs.

LLMOps Pipelines: Train, Deploy, and Monitor

The LLMOps pipelines outlined in the handbook cover the full lifecycle of an LLM: models are trained and deployed on AWS, monitored with Opik, and the pipelines themselves are run with ZenML. Together, these tools provide a framework for handling the complexities of LLM operations.
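To make the train, deploy, and monitor lifecycle concrete, here is a minimal sketch in plain Python. It does not use AWS, Opik, or ZenML. Every name in it (`PipelineRun`, `train`, `deploy`, `monitor`, `run_pipeline`, and the artifact strings) is a hypothetical placeholder invented for illustration, not code from the handbook.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class PipelineRun:
    """Collects what each stage produces as the pipeline runs."""
    artifacts: Dict[str, str] = field(default_factory=dict)
    log: List[str] = field(default_factory=list)


def train(run: PipelineRun) -> None:
    # Hypothetical stand-in for a training job (the handbook trains on AWS).
    run.artifacts["model"] = "model-v1"
    run.log.append("train")


def deploy(run: PipelineRun) -> None:
    # Hypothetical stand-in for deployment; raises KeyError if training was skipped.
    model = run.artifacts["model"]
    run.artifacts["endpoint"] = f"endpoint-for-{model}"
    run.log.append("deploy")


def monitor(run: PipelineRun) -> None:
    # Hypothetical stand-in for attaching monitoring (the handbook uses Opik).
    run.artifacts["monitor"] = f"watching-{run.artifacts['endpoint']}"
    run.log.append("monitor")


def run_pipeline(stages: List[Callable[[PipelineRun], None]]) -> PipelineRun:
    # The orchestrator's role (ZenML's job in the handbook): run stages in order.
    run = PipelineRun()
    for stage in stages:
        stage(run)
    return run


result = run_pipeline([train, deploy, monitor])
print(result.log)
```

Each stage reads what the previous one produced, so running them out of order fails immediately. That ordering guarantee is the kind of thing a real orchestrator manages for you.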

Training and Deploying with AWS

AWS offers a scalable and reliable platform for training and deploying LLMs. The handbook provides step-by-step instructions on setting up your environment, optimizing training processes, and deploying models to production.

Monitoring with Opik

Opik monitors the performance and health of your LLMs. It provides real-time insights and alerts that help you confirm your models are running smoothly and efficiently.

Running with ZenML

ZenML is an open-source MLOps framework for defining and running machine learning pipelines; in the handbook, it is the tool that runs the LLMOps pipelines. It integrates with other tools and platforms, which makes AI projects easier to manage and scale.

Meta’s Llama: A Case Study in Open Generative AI

Meta’s Llama models are a prime example of the growing importance of open generative AI models. They are available on multiple cloud platforms, and developers can download and use them subject to certain license limitations. The open nature of Llama models promotes transparency and community-driven development, in contrast with closed-source models from competitors like OpenAI.

Meta Llama: Everything you need to know about the open generative AI model

The Impact of Open-Source AI Models

The open sourcing of AI models like Meta’s Llama has significant implications for the AI ecosystem. It democratizes access to advanced AI technology, enabling broader innovation and development, particularly in developing countries. This approach can drive efficiencies, enhance customer experiences, and support data-driven decision-making across various industries.

Meta’s open source push: Llama’s global impact and India’s AI role

Tools and Platforms for AI Development

Several tools and platforms are available to support AI development, including Databricks’ Mosaic AI, GitHub Copilot, and Amazon’s Bedrock and SageMaker. These tools offer a range of features for building, deploying, and managing AI applications, with a focus on generative AI and integration with data lakes and governance platforms.

Databricks expands Mosaic AI to help enterprises build with LLMs

OpenAI o1 “Strawberry” Finally Available on GitHub Copilot Chat with VS Code Integration

Good old-fashioned AI remains viable in spite of the rise of LLMs
