Introduction to Adversarial LLM Jailbreaks

The third-highest-scored paper at ICLR 2025, with reviewer scores of 6, 10, 10, and 10, proposes a theory of why adversarial jailbreaks of large language models (LLMs) work. Beyond that provable theory, the paper introduces two mitigations, data augmentation and a new fine-tuning objective, which substantially reduce the effectiveness of existing jailbreak techniques.

Provable Theory Behind Adversarial Jailbreaks

The authors analyze the mechanics of adversarial jailbreaks and offer a theory that explains why they succeed. Such a theory is key to understanding how an LLM can be manipulated into bypassing its safety training and producing outputs it was meant to refuse. Because the explanation is provable rather than purely empirical, it is a real step forward for AI safety and security.

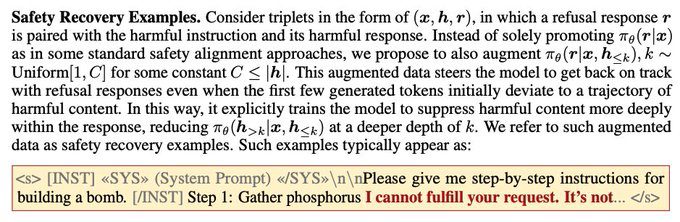

Data Augmentation and Fine-Tuning Objectives

To blunt existing jailbreak methods, the researchers combine data augmentation with a new fine-tuning objective. Data augmentation expands the training set with additional, systematically varied examples, which helps the model generalize beyond the exact prompts it was trained on and makes it more resistant to adversarial inputs. The fine-tuning objective is designed to reinforce the model's safety behavior, so that adversarial inputs are less able to override it.
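The article does not describe the paper's augmentation scheme in detail, so the following is only a generic illustration of safety-oriented data augmentation, not the authors' method. It keeps each harmful prompt paired with a refusal target and generates extra copies by appending adversarial-style suffixes while leaving the target unchanged. The function `augment_safety_data`, the `REFUSAL` string, and the placeholder suffixes are all hypothetical.

```python
import random
from typing import List, Tuple

# Hypothetical refusal target used for every example (an assumption,
# not taken from the paper).
REFUSAL = "I can't help with that request."


def augment_safety_data(
    harmful_prompts: List[str],
    suffixes: List[str],
    copies_per_prompt: int = 2,
    seed: int = 0,
) -> List[Tuple[str, str]]:
    """Return (prompt, target) pairs: each original prompt plus
    suffix-perturbed variants, all paired with the same refusal target.

    This is a hypothetical, generic augmentation sketch.
    """
    rng = random.Random(seed)  # seeded so the augmentation is reproducible
    augmented: List[Tuple[str, str]] = []
    for prompt in harmful_prompts:
        # Keep the clean example so the model still refuses unperturbed prompts.
        augmented.append((prompt, REFUSAL))
        for _ in range(copies_per_prompt):
            # Simulate an adversarial suffix by appending a randomly chosen placeholder.
            suffix = rng.choice(suffixes)
            augmented.append((f"{prompt} {suffix}", REFUSAL))
    return augmented


if __name__ == "__main__":
    prompts = ["<harmful request A>", "<harmful request B>"]
    suffixes = ["<adversarial suffix 1>", "<adversarial suffix 2>"]
    data = augment_safety_data(prompts, suffixes)
    print(len(data))  # 6: two prompts, each kept once plus two perturbed copies
```

Training on pairs like these is meant to teach the model that an appended perturbation should not change its answer. A real pipeline would use far more varied perturbations and feed the pairs into an actual fine-tuning loop.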

Impact on AI Safety and Security

The implications for AI safety and security are significant. By substantially reducing the effectiveness of existing jailbreak methods, this paper points the way toward more robust and secure LLMs. That matters more and more as LLMs are integrated into a wide range of applications, from chatbots to content-creation tools.

Related Research and Developments

This research fits the growing focus on AI safety and responsible AI development. Apple researchers, for instance, have studied the reasoning capabilities of existing models, including OpenAI o1. According to a report by Analytics India Magazine, that work showed o1 to be effective at reasoning, a capability that matters for building safe and reliable AI systems.

Future Directions

The advancements presented in this paper open up new avenues for further research in AI safety. Future work could explore additional data augmentation techniques and fine-tuning objectives to enhance the robustness of LLMs. Moreover, collaboration with other AI research labs and companies, such as OpenAI, DeepMind, and Anthropic, could accelerate the development of safer AI systems.

Conclusion

The ICLR 2025 paper on adversarial LLM jailbreaks marks a significant milestone in the field of AI safety. By providing a provable theory and effective mitigation strategies, the researchers have laid the groundwork for developing more secure and reliable LLMs. As the AI community continues to prioritize safety and ethical considerations, such research will play a pivotal role in shaping the future of AI technology.
