Optimizing Large Language Models: The Power of Pruning

The field of artificial intelligence (AI) continues to evolve rapidly, with large language models (LLMs) at the forefront. A recent study demonstrates a notable advance in optimizing LLMs, specifically Meta’s Llama 3.1 8B model. Using a technique known as pruning, the researchers removed 50% of the model’s parameters while recovering 98% of the original accuracy on key benchmarks. The result makes the model more efficient and substantially reduces its computational requirements.

Pruning removes unnecessary connections (weights) from a neural network, making the model smaller and faster. The technique is not new, but pruning aggressively while maintaining performance across a range of tasks has long been difficult. The recent study shows that, with the right approach, pruning can deliver strong results.
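The article does not say which criterion the study used to decide which connections are "unnecessary". A common baseline is magnitude pruning: weights with the smallest absolute value are assumed to matter least and are set to zero. The minimal sketch below applies that idea to a flat list of weights in plain Python. It is an illustration of the general technique, not the study's method, and the function name `magnitude_prune` is hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude.

    `weights` is a flat list of floats; a new list of the same length is returned.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


w = [0.9, -0.05, 0.3, -0.7, 0.01, 0.2, -0.4, 0.08]
print(magnitude_prune(w, 0.5))  # [0.9, 0.0, 0.3, -0.7, 0.0, 0.0, -0.4, 0.0]
```

With `sparsity=0.5`, the four smallest-magnitude weights become zero wherever they happen to sit. Because this unstructured pattern is irregular, it is generally hard for GPUs to turn into real speedups, which is why structured patterns such as 2:4 are used in practice.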

Key Achievements and Metrics

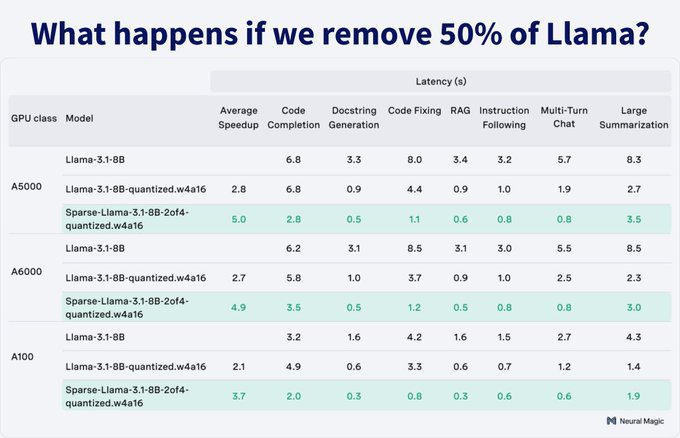

The study reports the following key metrics for pruning, both alone and combined with quantization:

- Recovery of 98.4% of the original accuracy on the Open LLM Leaderboard v1 with 50% fewer parameters, using a 2:4 sparsity pattern.

- 30% higher throughput and 1.8x lower latency, with up to 5.0x improvement when combined with quantization.

- Compatibility with 4-bit quantization (GPTQ) and Sparse-Marlin kernels.

- Full recovery on fine-tuning tasks such as GSM8K, Evol-CodeAlpaca, and Ultrachat-200K.

- 1.4-2.1x better multi-query throughput.

- Pruning and retraining used 13B training tokens and took 26 hours on 32 H100 GPUs.

- Optimized for NVIDIA Ampere GPUs and newer.
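The 2:4 sparsity pattern listed above means that in every contiguous group of four weights, at most two are nonzero. This regular structure is what the sparse tensor cores on NVIDIA Ampere and newer GPUs can accelerate. The sketch below assumes the two largest-magnitude weights in each group are kept; the study's actual selection procedure is not described in this article, and `prune_2_4` and `is_2_4_sparse` are hypothetical helpers.

```python
def prune_2_4(row):
    """Apply a 2:4 pattern: in every group of 4 consecutive weights, keep the 2
    with the largest magnitude and zero the other 2."""
    if len(row) % 4 != 0:
        raise ValueError("row length must be a multiple of 4")
    out = list(row)
    for start in range(0, len(row), 4):
        group = range(start, start + 4)
        # The two smallest-magnitude positions in this group are zeroed.
        smallest = sorted(group, key=lambda i: abs(row[i]))[:2]
        for i in smallest:
            out[i] = 0.0
    return out


def is_2_4_sparse(row):
    """Check that every group of 4 consecutive weights has at most 2 nonzeros."""
    return all(
        sum(1 for x in row[s:s + 4] if x != 0.0) <= 2
        for s in range(0, len(row), 4)
    )


w = [0.9, -0.05, 0.3, -0.7, 0.01, 0.2, -0.4, 0.08]
print(prune_2_4(w))  # [0.9, 0.0, 0.0, -0.7, 0.0, 0.2, -0.4, 0.0]
```

Note that 0.3 is dropped while the smaller 0.2 is kept: each group of four must keep exactly two weights, so the pattern is enforced locally rather than across the whole row. That local constraint is the price paid for a layout the hardware can accelerate.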

Combining Pruning with Quantization

While pruning alone yields significant improvements, combining it with quantization goes further. Quantization reduces the numerical precision of the model’s weights, which further improves efficiency with minimal loss of accuracy. The study showed that applying 4-bit quantization (GPTQ) together with pruning produced substantial performance gains.
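GPTQ decides how to round each weight using approximate second-order information, so that a layer's outputs change as little as possible. The sketch below uses the much simpler round-to-nearest scheme instead, purely to show what "4-bit" means: each weight becomes an integer code in [-8, 7] multiplied by a shared scale. It is not GPTQ, and the function names are hypothetical.

```python
def quantize_int4_symmetric(weights):
    """Round-to-nearest symmetric 4-bit quantization of one weight group.

    Returns (codes, scale), where each code is an int in [-8, 7] and
    weight is approximately code * scale.
    """
    max_abs = max(abs(w) for w in weights)
    # Map the largest magnitude to 7, the largest positive 4-bit code.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Reconstruct approximate float weights from 4-bit codes."""
    return [c * scale for c in codes]


# A row that has already been pruned to a 2:4 pattern.
w = [0.9, 0.0, 0.0, -0.7, 0.0, 0.2, -0.4, 0.0]
codes, scale = quantize_int4_symmetric(w)
print(codes)  # [7, 0, 0, -5, 0, 2, -3, 0]
```

Because the scheme is symmetric (no zero-point offset), weights that pruning set to zero are encoded as code 0 and stay exactly zero after dequantization, so the sparsity pattern survives quantization.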

Implications for AI and Machine Learning

The implications are far-reaching. More efficient LLMs can be deployed in a wider range of applications, from text generation and translation to summarization and other natural language processing tasks. The reduced computational requirements also mean these models can run on less powerful hardware, making advanced AI technology accessible to a broader audience.
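A back-of-the-envelope estimate of weight storage makes the hardware point more concrete. The sketch below is a rough calculation under stated assumptions: all ~8B parameters are treated as prunable (in practice some layers, such as embeddings, may be left dense), only weights are counted (not activations, the KV cache, or per-group quantization scales), and the 2:4 format is assumed to store 2 bits of index metadata per kept weight. `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(n_params, bits_per_weight, density=1.0, metadata_bits=0.0):
    """Rough weight-storage estimate in decimal gigabytes (1 GB = 1e9 bytes).

    density: fraction of weights actually stored (0.5 for a 2:4 pattern).
    metadata_bits: extra index bits stored per kept weight (an assumption).
    """
    kept = n_params * density
    total_bits = kept * (bits_per_weight + metadata_bits)
    return total_bits / 8 / 1e9


# Simplification: treat all ~8B parameters as prunable weights.
N_PARAMS = 8e9

print(weight_memory_gb(N_PARAMS, 16))                       # dense FP16: 16.0
print(weight_memory_gb(N_PARAMS, 4))                        # dense 4-bit: 4.0
print(weight_memory_gb(N_PARAMS, 4, 0.5, metadata_bits=2))  # 2:4 + 4-bit: 3.0
```

Under these assumptions, the weights shrink from about 16 GB in FP16 to about 3 GB with 2:4 sparsity plus 4-bit quantization. Actual memory use will be higher once runtime overheads are included.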

Future Directions and Challenges

Despite the significant progress made, there are still challenges to overcome. One of the main hurdles is ensuring that the optimized models maintain their performance across a diverse set of tasks. Additionally, while pruning and quantization have shown great promise, further research is needed to refine these techniques and explore new optimization methods.
