Imagine a World Where We Can Communicate with Animals

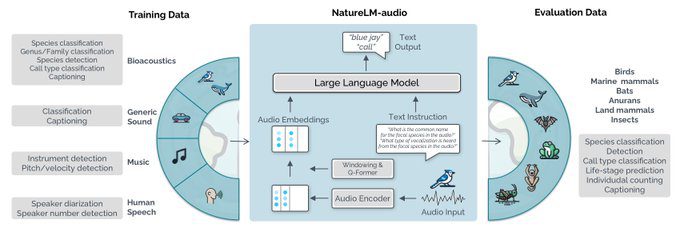

Humanity has long been fascinated by the idea of communicating with animals. The dream has eluded us for centuries, but with the advent of Large Language Models (LLMs) and their multi-modal translation abilities, it might finally be within reach. One step towards this goal is what the Earth Species Project (ESP) describes as the first bioacoustics foundation model, which aims to bridge the communication gap between humans and animals by leveraging advanced AI technologies.

Deciphering Alien Languages with AI

The potential of AI to decipher unknown languages is not limited to animals. According to an article in the Economic Times, AI simulations could be used to observe how human languages may have evolved, much as archaeologists interpret ancient languages from surviving scripts. The same approach might one day help interpret alien communications: by building and observing intelligent language-decoding systems, researchers could learn how to make sense of signals from an unfamiliar intelligence. This research highlights the growing interest in AI language development and its broader applications. For more details, you can read the full article on deciphering alien language.

AI and Neurotechnology: Restoring Lost Voices

In another development, AI has been used to restore the voices of people who have lost the ability to speak because of conditions such as ALS. Doctors at the University of California, Davis, surgically implanted electrodes in a patient's brain to decode the words he was attempting to say. This work is part of a broader field, which also includes companies such as Neuralink, that aims to connect people's brains to computers and potentially restore lost faculties. Such neurotechnology could have a major impact on people with speech impairments. For more information, you can read the full article on AI retrieving lost voices.

Real-Time Translation at Live Events

Mixhalo, a live event audio streaming platform, has introduced a new feature that uses AI to beam real-time translation to phones at events. This feature combines ultra-low latency audio streaming with AI-generated translations, offering real-time multilingual experiences at live events. This innovation has the potential to disrupt traditional interpretation services and improve accessibility at multilingual events. For more details, you can read the full article on AI-powered real-time translation.

Generating Soundtracks and Dialogue with AI

DeepMind has developed a new AI technology called V2A (Video-to-Audio) that generates soundtracks for videos, including music, sound effects, and dialogue. DeepMind claims the technology is unique in that it understands raw video pixels and automatically syncs the generated sounds with the footage. However, DeepMind has decided not to release it to the public anytime soon, in order to prevent misuse. The technology could eventually automate sound design and displace jobs in the film and music industries. For more information, you can read the full article on AI generating soundtracks and dialogue.

Open-Source Voice Assistants

The Large-scale Artificial Intelligence Open Network (LAION) is working on an open-source, AI-powered voice assistant called BUD-E. The assistant focuses on natural conversation, emotional intelligence, and extensibility, so that it can be integrated with other apps and services. The project aims to encourage the development of open-source voice assistants and to offer privacy-focused alternatives. For more details, you can read the full article on open-source voice assistants.

