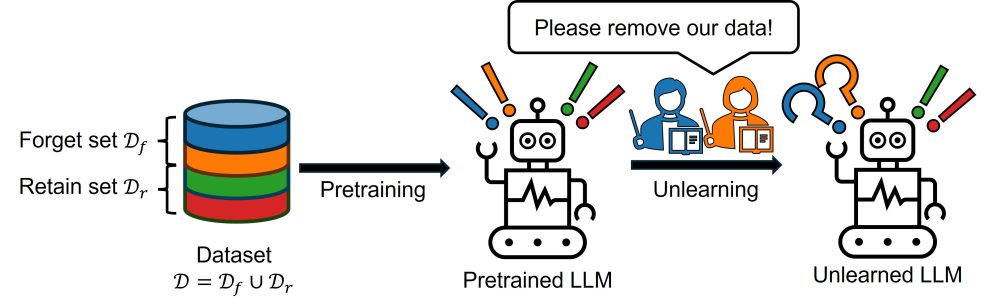

The concept of “unlearning” in artificial intelligence (AI) has gained attention as AI models are increasingly trained on sensitive or personal data. Unlearning means making a model forget specific data it was trained on, which matters for complying with data privacy regulations and for removing sensitive information. However, recent research challenges the effectiveness of current unlearning techniques and raises important questions about their impact on model performance.

The Role of Quantization in AI Unlearning

A recent paper titled “Does Your LLM Truly Unlearn? An Embarrassingly Simple Approach to Recover Unlearned Knowledge” sheds new light on this issue. While not yet peer-reviewed, the study suggests that applying quantization to a model that has undergone unlearning can unintentionally restore the forgotten knowledge. Quantization reduces the numerical precision of a model’s parameters, typically to save memory and compute. In this context, the researchers found that the loss of precision could revive most of the information the unlearning process was meant to erase.

Specifically, in full precision the unlearned models retained an average of 21% of the knowledge they were meant to forget. After 4-bit quantization, that figure rose to 83%. This finding challenges the assumption that unlearning methods effectively and permanently erase specific knowledge from AI models.
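
One way to build intuition for this effect, offered here as an illustration rather than as the paper's analysis: if unlearning only nudges the weights slightly, coarse rounding can map the original and the unlearned weights to the same low-precision values. The sketch below uses made-up weights and a simple symmetric round-to-nearest scheme. The function `quantize` and all numbers are hypothetical and are not taken from the paper's code.

```python
def quantize(weights, bits=4):
    """Symmetric round-to-nearest quantization; returns integer codes.

    The scale maps the largest-magnitude weight to the largest positive code
    (7 for 4 bits), so every weight snaps to one of a few integer levels.
    """
    max_code = 2 ** (bits - 1) - 1
    scale = max(abs(w) for w in weights) / max_code
    return [round(w / scale) for w in weights]


# Hypothetical weights before unlearning, and after unlearning nudged them slightly.
original = [0.50, -0.31, 0.12, 0.84, -0.67, 0.05]
unlearned = [0.51, -0.32, 0.11, 0.84, -0.68, 0.04]

original_codes = quantize(original)
unlearned_codes = quantize(unlearned)

print(original_codes)
print(unlearned_codes)
print("identical after 4-bit quantization:", original_codes == unlearned_codes)
```

In full precision the two weight lists differ, but their 4-bit codes coincide, so in this toy setting the quantized unlearned model is indistinguishable from the quantized original.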

Unlearning and Its Impact on Model Performance

Understanding the broader context of unlearning is key to grasping its challenges. As reported by TechCrunch, large language models (LLMs) like GPT-4 are trained on vast datasets that include publicly available information from websites, books, and news articles. This raises concerns related to copyright, data privacy, and the ethical use of information, which has driven the need for unlearning techniques.

Current unlearning methods typically involve algorithms designed to steer models away from specific pieces of data that need to be forgotten. This approach aims to make the model less likely to output the unlearned data. However, unlearning is not as simple as pressing a “delete” button. The process involves complex trade-offs, where making a model forget certain data can often lead to a decline in overall performance, reducing the model’s ability to answer questions accurately or make predictions.
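
One simple family of unlearning methods, gradient ascent on the data to be forgotten, makes this trade-off easy to see. The sketch below is a toy illustration, not the method of any specific paper: it assumes a two-weight linear model with squared error, made-up retain and forget examples, and hypothetical helper names (`predict`, `loss`, `grad`).

```python
def predict(w, x):
    """Linear model: dot product of weights and features."""
    return sum(wi * xi for wi, xi in zip(w, x))


def loss(w, data):
    """Mean squared error over a list of (features, target) pairs."""
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


def grad(w, data):
    """Gradient of the mean squared error with respect to the weights."""
    g = [0.0] * len(w)
    for x, y in data:
        residual = predict(w, x) - y
        for i, xi in enumerate(x):
            g[i] += 2 * residual * xi / len(data)
    return g


# Hypothetical data: the forget example shares features with the retain examples.
retain_set = [([1.0, 0.0], 1.0), ([0.0, 1.0], 2.0)]
forget_set = [([1.0, 1.0], 3.0)]

# Hypothetical "trained" weights that fit both sets almost perfectly.
w = [1.01, 2.01]
forget_before, retain_before = loss(w, forget_set), loss(w, retain_set)

# Unlearning by gradient ASCENT: step uphill on the forget-set loss.
learning_rate = 0.1
for _ in range(10):
    g = grad(w, forget_set)
    w = [wi + learning_rate * gi for wi, gi in zip(w, g)]

forget_after, retain_after = loss(w, forget_set), loss(w, retain_set)
print("forget loss:", forget_before, "->", forget_after)
print("retain loss:", retain_before, "->", retain_after)
```

The forget-set loss rises, which is the goal, but the retain-set loss rises too because the examples share features. That side effect is the kind of performance decline described above.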

Evaluating Unlearning Effectiveness: The MUSE Benchmark

To evaluate the success of unlearning algorithms, researchers have developed a framework called MUSE (Machine Unlearning Six-way Evaluation). MUSE tests a model’s ability to forget specific pieces of information while retaining related, general knowledge. For instance, a successful unlearning algorithm should cause a model to forget the plot of a specific book, like one from the Harry Potter series, while still retaining general knowledge about literature or fantasy themes.

However, the MUSE study found that while unlearning algorithms can remove certain data from a model’s memory, the resulting model typically performs worse overall. The algorithms tended to degrade the model’s general question-answering capabilities, which highlights a significant challenge in designing effective unlearning methods.

The Role of Quantization in Restoring Forgotten Knowledge

The more recent research on quantization adds another layer of complexity. Although quantization is normally used to reduce model precision for computational efficiency, it can unintentionally restore some of the knowledge that was supposed to be forgotten. This challenges the assumption that unlearning is a one-way process and suggests that the way AI models store knowledge is more complex than previously thought.

For AI practitioners and researchers, this discovery underscores the need for careful evaluation of unlearning methods. It is essential to design these methods in a way that ensures the model forgets the specific information required without causing significant degradation to the model’s overall functionality or utility. This is particularly important as unlearning methods are increasingly seen as essential for compliance with data privacy laws like the GDPR (General Data Protection Regulation) in Europe or similar regulations globally.

Implications for AI’s Future and Data Privacy

The growing concern around data privacy, intellectual property, and ethical AI development means that unlearning techniques will become more important as AI systems are deployed in more sensitive contexts. Whether for removing personal information or addressing copyright concerns, unlearning techniques will play a crucial role in shaping responsible AI deployment.

The discovery that quantization can restore unlearned knowledge is a crucial reminder of how delicate and complex the AI training process can be. As AI models become more capable, it is clear that the task of making AI forget something is not as straightforward as it might seem.

Conclusion

Unlearning in AI is a critical but challenging problem. While AI models must be able to forget specific data to comply with privacy regulations and ethical guidelines, the process of unlearning is far from simple. Techniques like quantization introduce new challenges, potentially restoring “forgotten” knowledge and complicating the task of designing truly forgetful AI systems. As AI continues to evolve, researchers must remain vigilant in addressing these complexities to ensure that AI technologies can be both effective and ethically compliant.

Readers who want to dive deeper into this topic can explore the paper on arXiv and access the code on GitHub. Additional articles on TechCrunch and Neuroscience News offer a broader perspective on the implications of unlearning in AI.
