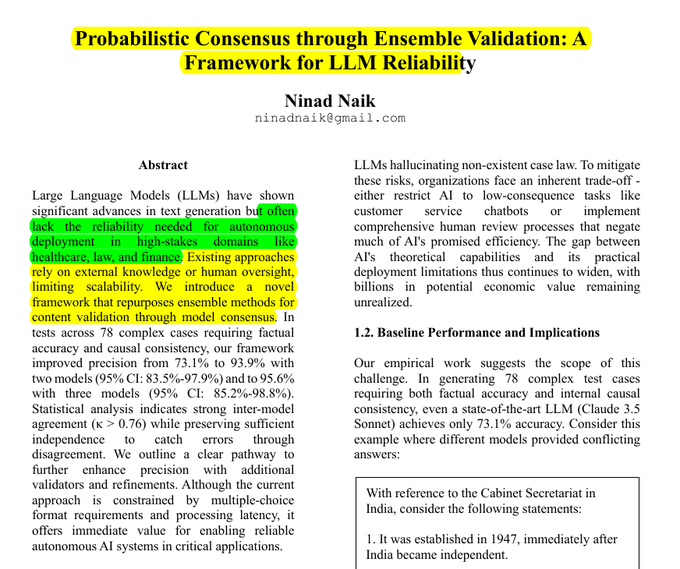

The Challenge of AI Reliability in Critical Domains

Large Language Models (LLMs) have shown remarkable capabilities in generating human-like text and performing complex tasks. However, AI reliability remains a significant concern, particularly for autonomous deployment in critical domains such as healthcare and finance. Even advanced LLMs achieve only 73.1% accuracy on complex tasks, which is too unreliable for high-stakes applications.

The Solution: Ensemble Methods for Content Validation

A recent paper proposes an innovative solution to enhance the reliability of LLMs through ensemble methods for content validation. This approach leverages the consensus of multiple models to catch each other’s mistakes, significantly improving accuracy. The study utilized three models: Claude 3.5 Sonnet, GPT-4o, and Llama 3.1 405B Instruct.

The content is presented as multiple-choice questions for standardized evaluation. Each model independently assesses the content and provides single-letter responses. The system requires complete agreement among validators for content approval, eliminating reliance on external knowledge sources or human oversight.
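The unanimous-agreement rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's implementation: the names `Validator`, `normalize`, `consensus_answer`, and `approve` are hypothetical, the answer letters A to D are an assumption, and the validators are stubs standing in for real model calls.

```python
from typing import Callable, Optional, Sequence

# A validator takes a multiple-choice prompt and returns a single letter.
# In practice each one would wrap a call to a different LLM (assumption);
# here they are plain functions so the sketch runs without any API access.
Validator = Callable[[str], str]

VALID_LETTERS = {"A", "B", "C", "D"}  # assumed answer options


def normalize(raw: str) -> Optional[str]:
    """Reduce a raw reply to one uppercase letter, or None if malformed."""
    letter = raw.strip().upper()
    return letter if letter in VALID_LETTERS else None


def consensus_answer(prompt: str, validators: Sequence[Validator]) -> Optional[str]:
    """Return the shared letter if every validator agrees, otherwise None."""
    answers = [normalize(v(prompt)) for v in validators]
    if not answers or None in answers:
        return None  # missing or malformed reply: reject conservatively
    return answers[0] if len(set(answers)) == 1 else None


def approve(prompt: str, proposed: str, validators: Sequence[Validator]) -> bool:
    """Approve content only when all validators agree with the proposed answer."""
    agreed = consensus_answer(prompt, validators)
    return agreed is not None and agreed == normalize(proposed)


if __name__ == "__main__":
    # Stub validators standing in for three different models.
    stubs = [lambda p: "B", lambda p: " b ", lambda p: "B"]
    print(approve("Which option is correct? A/B/C/D", "B", stubs))
```

Rejecting on any disagreement or malformed reply is what gives a rule like this its conservative character: content is approved only when every validator independently lands on the same letter.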

Key Insights from the Study

- Probabilistic consensus through multiple models is more effective than single-model validation.

- High but imperfect agreement levels (κ > 0.76) indicate an optimal balance between reliability and independence.

- The multiple-choice format is crucial for standardized evaluation and reliable consensus.

- The framework shows a conservative bias, prioritizing precision over recall.

- Error rates compound dramatically in multi-step reasoning processes.
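The last point, about compounding errors, can be made concrete with a short calculation. The sketch below assumes that each reasoning step succeeds independently with the same probability, which is a simplification. It borrows the article's 73.1% and 95.6% figures as per-step success rates purely for illustration, and the function name `chain_success` is hypothetical.

```python
def chain_success(per_step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain is correct.

    Assumes steps are independent and equally reliable (a simplification).
    """
    if not 0.0 <= per_step_accuracy <= 1.0:
        raise ValueError("per_step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_accuracy ** steps


if __name__ == "__main__":
    for steps in (1, 3, 5):
        single = chain_success(0.731, steps)    # single-model figure
        ensemble = chain_success(0.956, steps)  # three-model figure
        print(f"{steps} step(s): single={single:.1%}, ensemble={ensemble:.1%}")
```

Under these assumptions, a five-step chain at 73.1% per step succeeds only about one time in five, while the same chain at 95.6% per step succeeds roughly four times in five.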

Results and Impact

The study's results are promising. In a two-model configuration, the system achieved 93.9% precision (95% CI: 83.5%-97.9%); with three models, precision rose to 95.6% (95% CI: 85.2%-98.8%). In baseline testing, this approach reduced the error rate from 26.9% (the complement of the 73.1% single-model accuracy) to 4.4% (the complement of the 95.6% three-model precision), bringing AI much closer to the reliability that critical applications demand.
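The width of these confidence intervals reflects how few items were evaluated. One common way to compute such an interval for a proportion is the Wilson score interval, sketched below. The article does not state which method or what counts the study used, so both are assumptions. The counts (46 correct out of 49 approved) are hypothetical values picked only because they give a precision near 93.9%.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 is about 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return center - half, center + half


if __name__ == "__main__":
    # Hypothetical counts, not taken from the study.
    approved, correct = 49, 46
    low, high = wilson_interval(correct, approved)
    print(f"precision = {correct / approved:.1%}, 95% CI = [{low:.1%}, {high:.1%}]")
```

With a sample of this size, the interval spans well over ten percentage points, which is worth keeping in mind when comparing the two-model and three-model figures: their intervals overlap substantially.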

Applications and Future Prospects

The implications of this study are far-reaching. By improving the reliability of LLMs, ensemble validation can make AI systems viable for critical applications in healthcare, finance, and beyond. This approach aligns with the growing interest in AI safety and the need for responsible AI development.

For instance, LLMs can help home robots recover from errors without human help, showcasing the potential of AI to adapt and correct itself in real time. Similarly, OpenAI’s o1 model demonstrates the importance of self-fact-checking capabilities in enhancing AI reliability.
