Understanding the Layers and Matrix Operations in LLMs

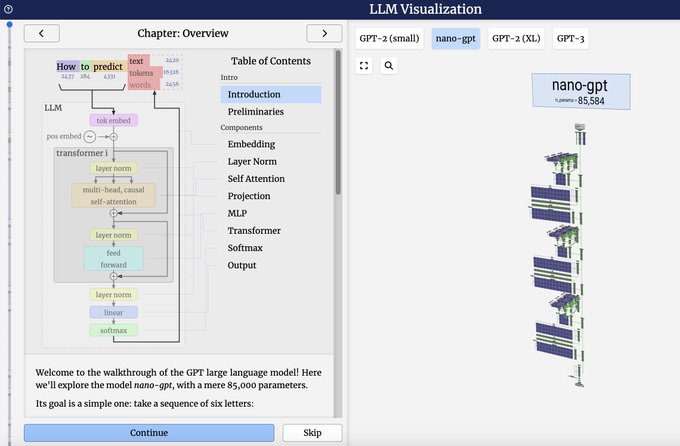

Large Language Models (LLMs) have transformed artificial intelligence by enabling machines to understand and generate human-like text. Models such as those behind OpenAI’s ChatGPT, Google’s Gemini, and Meta’s Llama process text by passing it through a stack of layers. Each layer is built largely from matrix operations, such as the matrix multiplications in its attention and feed-forward blocks. For a detailed look inside an LLM, including all its layers and matrix operations, see the breakdown shared by @santiviquez and @BrendanBycroft: https://tinyurl.com/mtvxu2nm.
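To make "matrix operations" concrete, here is a minimal pure-Python sketch of one operation every transformer layer performs: single-head causal self-attention, softmax(QKᵀ/√d_k)V, as formulated in the original Transformer paper. The function names, toy inputs, and identity projection matrices are illustrative assumptions. A real LLM layer also has multiple heads, an output projection, normalization, and a feed-forward block, and it runs on optimized tensor libraries rather than Python lists.

```python
import math

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    # Subtract the row maximum for numerical stability; exp(-inf) becomes 0.
    mx = max(row)
    exps = [math.exp(s - mx) for s in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v, causal=True):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    x holds one row per token. w_q, w_k, w_v stand in for learned projection
    matrices (hypothetical values supplied by the caller, for illustration).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))      # (tokens x tokens) similarity matrix
    for i, row in enumerate(scores):
        for j in range(len(row)):
            row[j] /= math.sqrt(d_k)
            if causal and j > i:          # a token may not attend to later tokens
                row[j] = float("-inf")
    weights = [softmax(row) for row in scores]
    return matmul(weights, v)             # each output row mixes value vectors

# Toy input: 3 tokens with 2-dimensional embeddings and identity projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out = self_attention(x, identity, identity, identity)
print(out)  # out[0] is [1.0, 0.0]: the first token can only attend to itself
```

Every step here, from the projections to the score matrix to the final weighted sum, is a matrix product. That is why LLM inference speed depends so heavily on how efficiently the hardware multiplies matrices.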

Government Policies and Their Impact on AI Innovation

The Indian government’s recent requirement for explicit permission before deploying AI models, including LLMs, on the Indian internet has sparked significant debate. The policy aims to ensure responsible AI development and combat misinformation, but it has also raised concerns that it could hinder innovation and growth, particularly for startups. The regulation could favor large corporations such as Google, Meta, Microsoft, and OpenAI, which have more resources to handle compliance. For more details, see the article govt-missive-to-seek-nod-to-deploy-llms-to-hurt-small-companies-startups.

Innovations in Legal Technology with Large Language Models

Lexlegis AI has launched Ask, a generative AI querying model designed to help legal professionals with research, analysis, and drafting. The platform, which also includes tools such as Interact and Draft, is currently in beta testing. It aims to streamline legal work by giving precise answers and summarizing information efficiently, which could change how legal research and document drafting are done. For more information, see lexlegis-ai-launches-llm-platform-to-help-with-legal-research-analysis.

LLMs in Business Intelligence

Fluent, an AI-powered natural language querying platform, aims to make business intelligence tools easier and faster to use. By letting non-technical users query business databases directly in natural language, Fluent removes the need for SQL expertise or hand-built dashboards. This broader access to data could disrupt the traditional business intelligence market by making data analysis more accessible and efficient. For more, read about Fluent’s approach in the article fluent.

Microsoft’s Breakthrough in LLM Inference

Microsoft has launched BitNet.cpp, an inference framework that can run a 100-billion-parameter BitNet b1.58 model on a single CPU. It reaches speeds comparable to human reading (roughly 5 to 7 tokens per second), while speeding up CPU inference and reducing energy consumption. This could change how LLMs are deployed by making it practical to run large models efficiently and cheaply on local devices. For further details, see microsoft-launches-inference-framework-to-run-100b-1-bit-llms-on-local-devices.
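The "1.58" in BitNet b1.58 refers to ternary weights. Each weight takes one of three values, -1, 0, or +1, which carries log2(3) ≈ 1.58 bits of information. The pure-Python sketch below shows why this helps on CPUs. It assumes the absmean weight quantization described in the BitNet b1.58 paper: scale by the mean absolute weight, round, and clip to [-1, 1]. Once weights are ternary, a matrix-vector product needs only additions and subtractions, plus one multiplication by the shared scale per output. The function names are hypothetical. Activation quantization (8-bit in the paper) is omitted, and this is not how BitNet.cpp’s optimized kernels are implemented.

```python
import math

def absmean_ternary(w, eps=1e-5):
    """Quantize a weight matrix to {-1, 0, +1} plus one per-matrix scale (gamma).

    Sketch of the absmean scheme: gamma is the mean absolute weight; each
    weight is divided by gamma, rounded to the nearest integer, and clipped.
    """
    flat = [abs(v) for row in w for v in row]
    gamma = sum(flat) / len(flat)
    q = [[max(-1, min(1, round(v / (gamma + eps)))) for v in row] for row in w]
    return q, gamma

def ternary_matvec(q, gamma, x):
    """Compute gamma * (q @ x) using only additions and subtractions per weight."""
    y = []
    for row in q:
        acc = 0.0
        for weight, xi in zip(row, x):
            if weight == 1:
                acc += xi
            elif weight == -1:
                acc -= xi
            # weight == 0 contributes nothing, so it is skipped entirely
        y.append(gamma * acc)  # a single multiplication per output, for the scale
    return y

w = [[0.5, -0.02, -0.6], [0.04, 0.55, 0.45]]  # toy full-precision weights
q, gamma = absmean_ternary(w)
y = ternary_matvec(q, gamma, [1.0, 2.0, 3.0])
print(q)                       # ternary weights, here [[1, 0, -1], [0, 1, 1]]
print(round(math.log2(3), 2))  # 1.58 bits of information per ternary weight
```

One design note: BitNet b1.58 models are trained with this quantization in the loop. Rounding an already-trained full-precision matrix, as in the toy example, generally loses accuracy.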

On-Premise Deployment of LLMs

Giga ML addresses enterprises’ data privacy and customization concerns with a platform for deploying large language models on-premise. Keeping models on a company’s own infrastructure gives it control over customization and data privacy and can lower costs, making this an attractive alternative to cloud-based solutions. The approach is particularly relevant for industries such as finance and healthcare, where data privacy is paramount. Learn more in the article giga-ml-wants-to-help-companies-deploy-llms-offline.
