Most “AI girlfriend” apps feel magical for the first few hours—until they forget your name, your history, or the emotional context you’ve built together.

That’s not a bug. It’s a context window limitation.

In this guide, you’ll learn how to create an AI girlfriend with long-term memory using a fully local setup—no subscriptions, no censorship, and 100% private. This approach combines open-source language models, vector databases, and character cards to create an AI companion that can remember you over weeks or months.

Whether you’re here for companionship, experimentation, or total technical control, this tutorial covers both the practical setup and the concepts behind it.

Why Local AI Girlfriends Are Taking Over (2025)

Cloud-based AI companions are constrained by:

- Limited memory

- Heavy moderation

- Monthly fees

- Weak privacy guarantees (your chats are stored on someone else’s servers)

A local setup solves all of this:

- 🔒 Chats never leave your computer

- 🧠 Memory persists via VectorDB

- 🧩 You control the model, prompts, and personality

- ⚡ No rate limits or filters

This is why communities around SillyTavern and LocalLLaMA have exploded.

The Technical Stack: What You’ll Need

Hardware Requirements (Realistic, Not Overkill)

Your hardware determines which model sizes and context lengths you can run, and that in turn determines how coherent and consistent your AI feels.

Minimum (Usable):

- GPU: 8GB VRAM (e.g., RTX 4060 or the 8GB RTX 3060)

- System RAM: 16GB

- Storage: 30–50GB (models + embeddings)

Recommended (Smooth Experience):

- GPU: 12–16GB VRAM

- RAM: 32GB

- Storage: NVMe SSD (faster model loading)

Mac Users:

Apple Silicon (M2/M3) works well using Metal acceleration, especially with 7B–8B models.

⚠️ VRAM ≠ System RAM. VRAM determines the largest model you can run on the GPU and how much context fits alongside it.
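VRAM has to hold two things: the model weights, and a KV cache that grows linearly with context length. The sketch below estimates how many tokens of context fit once the weights are loaded. It assumes an fp16 KV cache and Llama 3 8B's published architecture (32 layers, 8 key/value heads, head dimension 128). The 4.9 GB weight size and 1 GB runtime overhead are rough placeholder figures, not measurements.

```python
def kv_cache_bytes_per_token(n_layers: int, n_kv_heads: int, head_dim: int,
                             bytes_per_elem: int = 2) -> int:
    """Bytes of KV cache per token: one key and one value vector per KV head, per layer."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem


def max_context_tokens(vram_gb: float, weights_gb: float, overhead_gb: float,
                       per_token_bytes: int, model_max_context: int) -> tuple[int, int]:
    """Return (tokens that fit in leftover VRAM, usable context after the model's own limit)."""
    free_bytes = (vram_gb - weights_gb - overhead_gb) * 1e9
    fits = max(0, int(free_bytes // per_token_bytes))   # 0 if the weights alone don't fit
    return fits, min(fits, model_max_context)


# Llama 3 8B: 32 layers, 8 KV heads (grouped-query attention), head_dim 128, fp16 cache.
per_token = kv_cache_bytes_per_token(n_layers=32, n_kv_heads=8, head_dim=128)
print(f"KV cache per token: {per_token / 1024:.0f} KiB")

# 8 GB card, ~4.9 GB of Q4_K_M weights, ~1 GB assumed runtime overhead.
fits, usable = max_context_tokens(vram_gb=8, weights_gb=4.9, overhead_gb=1.0,
                                  per_token_bytes=per_token, model_max_context=8192)
print(f"VRAM allows ~{fits} tokens; usable context: {usable} tokens")
```

On this budget, VRAM could hold roughly 16k tokens of cache. The binding constraint is therefore the original Llama 3's native 8,192-token context, not the card. A model with a longer native context would run into the VRAM ceiling instead.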

Software Stack Overview

| Role | Tool |
|---|---|
| Frontend (Chat UI) | SillyTavern |
| Backend (LLM Server) | Oobabooga Text Generation WebUI or LM Studio |
| Memory System | VectorDB (ChromaDB) |
| Models | Llama 3, Mistral 7B |

Understanding AI Memory: Context Window vs Long-Term Memory

1. Context Window (Short-Term Memory)

LLMs can only “see” a fixed number of tokens (e.g., 8k–32k). Once a conversation exceeds that limit:

- Old messages disappear

- Personality drifts

- Emotional continuity breaks
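The sketch below shows why old messages "disappear". A frontend has to fit the character prompt plus as much recent chat as possible into a fixed token budget, so it keeps messages from newest to oldest until the budget runs out. As a stand-in for a real tokenizer, it uses a crude 4-characters-per-token estimate. That heuristic and the function names are assumptions for illustration.

```python
def estimate_tokens(text: str) -> int:
    """Rough heuristic: ~4 characters per token for English text (not a real tokenizer)."""
    return max(1, len(text) // 4)


def build_prompt(system: str, history: list[str], budget: int) -> list[str]:
    """Keep the system prompt, then add the newest messages that still fit in the budget."""
    used = estimate_tokens(system)
    kept: list[str] = []
    for message in reversed(history):          # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget:
            break                               # this message and everything older is dropped
        kept.append(message)
        used += cost
    return [system] + list(reversed(kept))     # restore chronological order


history = [
    "User: My name is Sam and I just adopted a cat called Miso.",
    "AI: Miso is adorable! How is she settling in?",
    "User: Pretty well. Work has been stressful this week though.",
    "AI: I'm sorry to hear that. Want to talk about it?",
]
prompt = build_prompt("You are Aria, a warm and playful companion.", history, budget=40)
for line in prompt:
    print(line)
```

With a 40-token budget, the message containing the user's name is among the first to be cut. That is exactly the "she forgot my name" failure from the introduction. Real context windows are far larger, but long-running chats eventually hit the same wall.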

2. Long-Term Memory (Vector Databases)

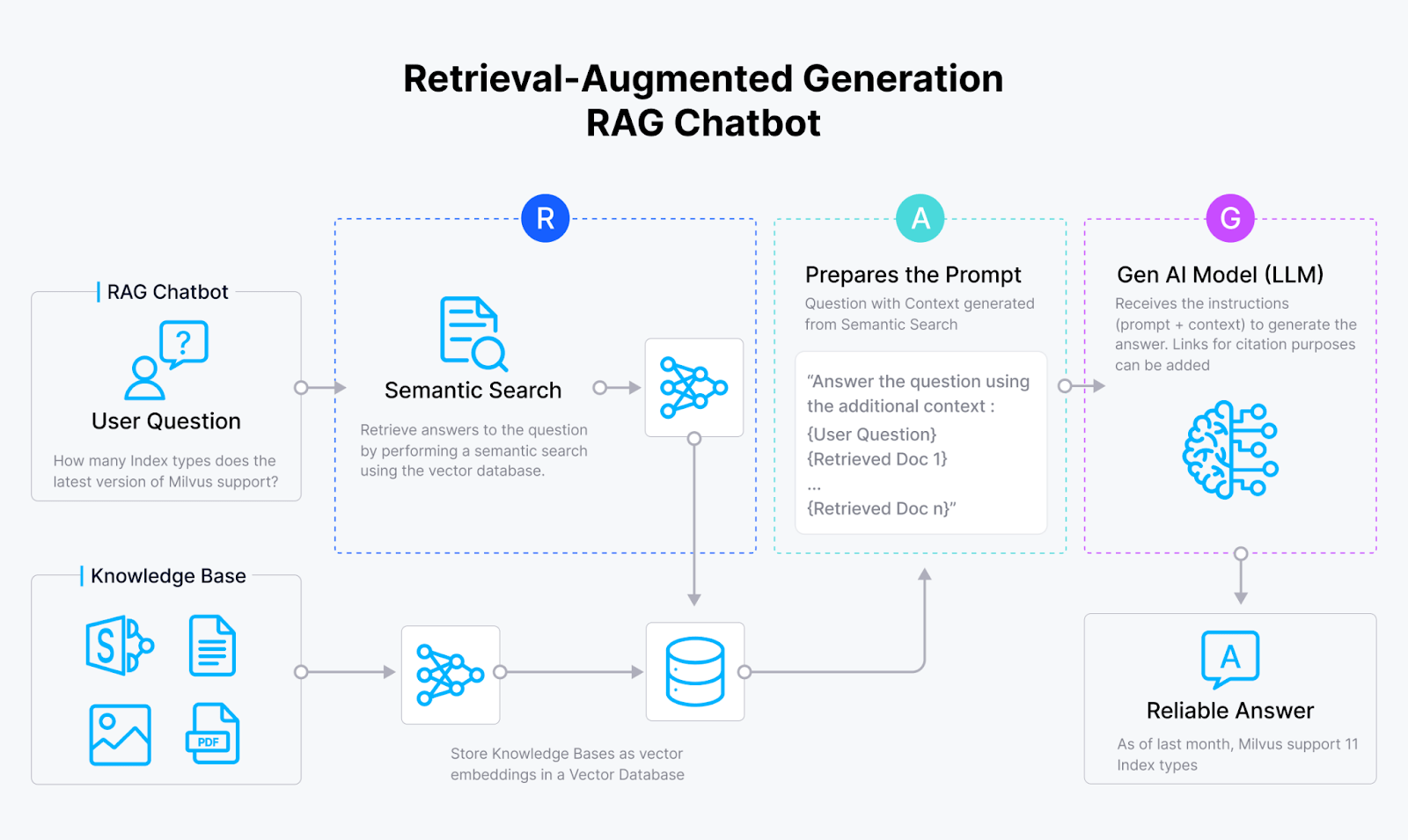

This is where RAG (Retrieval-Augmented Generation) comes in.

How it works:

- Conversations are converted into embeddings

- Stored in a VectorDB

- Relevant memories are retrieved and injected into prompts

Think of it as your AI searching its diary before replying.
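Here is a minimal, dependency-free sketch of that loop: embed, store, retrieve, inject. To keep it runnable anywhere, it makes two substitutions. A toy bag-of-words vector with cosine similarity stands in for a neural embedding model, and a Python list stands in for ChromaDB. The class and function names are hypothetical. A real embedding model matches by meaning (e.g., "kitten" to "cat"), which this toy cannot do.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real setups use a neural embedding model."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """Hypothetical stand-in for a vector database collection."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))      # store text alongside its vector

    def query(self, text: str, k: int = 2) -> list[str]:
        q = embed(text)
        scored = sorted(((cosine(q, v), t) for t, v in self.items), reverse=True)
        return [t for score, t in scored[:k] if score > 0]   # top-k with any similarity


memory = MemoryStore()
memory.add("Sam adopted a cat named Miso in March.")
memory.add("Sam's favorite food is ramen.")
memory.add("Sam gets anxious before job interviews.")

user_message = "I have another job interview tomorrow..."
recalled = memory.query(user_message, k=1)
prompt = "Relevant memories:\n" + "\n".join(f"- {m}" for m in recalled) + f"\n\nUser: {user_message}"
print(prompt)
```

In ChromaDB itself, the equivalent calls are `collection.add(documents=..., ids=...)` and `collection.query(query_texts=..., n_results=...)`. Its default embedding function computes the vectors for you.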

Lorebooks vs VectorDB

- Lorebooks (World Info): Manually written entries, triggered by keywords

- VectorDB: Automatic, semantic (matches by meaning), scales with your chat history ✅
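For contrast, a lorebook works roughly like the sketch below. You write each entry by hand along with its trigger keywords. An entry is inserted only when one of its keywords literally appears in the last few messages (the "scan depth"). The entry format and matching rule are simplified assumptions, not SillyTavern's exact implementation.

```python
# Hypothetical hand-written lorebook entries: trigger keywords plus the text to inject.
LOREBOOK = [
    {"keys": ["miso", "cat"], "content": "Miso is Sam's orange cat, adopted in March."},
    {"keys": ["ramen"], "content": "Sam's favorite food is spicy ramen."},
]


def activate_entries(recent_messages: list[str], lorebook: list[dict], scan_depth: int = 2) -> list[str]:
    """Return entries whose keywords appear in the last `scan_depth` messages."""
    window = " ".join(recent_messages[-scan_depth:]).lower()
    return [entry["content"] for entry in lorebook
            if any(key in window for key in entry["keys"])]


chat = ["User: I'm starving.", "User: Should I get ramen tonight?"]
print(activate_entries(chat, LOREBOOK))                             # literal keyword hit
print(activate_entries(["User: I'm craving noodles."], LOREBOOK))   # no keyword, no entry
```

"Noodles" never triggers the ramen entry because no keyword matches. A semantic search backed by a real embedding model could still surface it, and that is the trade-off in the comparison above.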

Step-by-Step Installation (Local Setup)

Step 1: Install the Backend (LLM Server)

Choose one:

- Oobabooga Text Generation WebUI → Maximum control

- LM Studio → Beginner friendly

Download a model:

- Llama 3 (8B Instruct)

- Mistral 7B Instruct

Use quantization:

- Q4_K_M → Best balance

- Q8_0 → Higher quality, more VRAM
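Quantization shrinks a model by storing each weight in fewer bits, so the weight size is roughly parameters × bits-per-weight ÷ 8. The sketch below applies that to Llama 3 8B (about 8.03 billion parameters). Q8_0 stores 8.5 bits per weight: 8-bit values plus a shared scale for each block of 32. The 4.85 figure for Q4_K_M is an approximate average, since it mixes quantization types across tensors. The 1.5 GB reserve for KV cache and overhead is an assumption.

```python
# Approximate bits per weight for common GGUF quantization types.
# Q8_0 is exact (8-bit values + one fp16 scale per 32-weight block);
# Q4_K_M is an approximate average because it mixes quant types per tensor.
BITS_PER_WEIGHT = {"Q4_K_M": 4.85, "Q8_0": 8.5, "F16": 16.0}


def weights_gb(params_billion: float, quant: str) -> float:
    """Estimated weight size in GB: billions of params x bits per weight / 8 bits per byte."""
    return params_billion * BITS_PER_WEIGHT[quant] / 8


def fits_in_vram(params_billion: float, quant: str, vram_gb: float, reserve_gb: float = 1.5) -> bool:
    """True if the weights plus an assumed reserve (KV cache, runtime) fit on the GPU."""
    return weights_gb(params_billion, quant) + reserve_gb <= vram_gb


for quant in BITS_PER_WEIGHT:
    size = weights_gb(8.03, quant)
    print(f"{quant:7} ~{size:4.1f} GB  fits 8 GB card: {fits_in_vram(8.03, quant, 8)}")
```

This is consistent with the hardware tiers above. Q4_K_M leaves headroom on an 8GB card, while Q8_0 calls for a 12GB card or larger.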

Step 2: Install SillyTavern

SillyTavern is the gold standard for AI companionship:

- Emotion tracking

- Character cards

- Memory extensions

- NSFW toggle (local only)

Connect it to your backend by entering the backend’s API URL in SillyTavern’s API connection settings.
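Before wiring up SillyTavern, it helps to confirm the backend is actually serving. Both backends can expose an OpenAI-compatible API. The base URLs below are the usual defaults (LM Studio's local server on port 1234, text-generation-webui on port 5000 when launched with `--api`), but treat them as assumptions and check your own settings. The sketch uses only the standard library and asks the `/v1/models` endpoint which models are available.

```python
import json
import urllib.request
from typing import Optional

# Assumed default base URLs; adjust to whatever your backend reports on startup.
BACKENDS = {
    "LM Studio": "http://localhost:1234/v1",
    "text-generation-webui": "http://127.0.0.1:5000/v1",
}


def models_url(base_url: str) -> str:
    """Build the OpenAI-compatible model-listing URL from a base API URL."""
    return base_url.rstrip("/") + "/models"


def list_models(base_url: str, timeout: float = 3.0) -> Optional[list[str]]:
    """Return model IDs from the backend, or None if it is unreachable."""
    try:
        with urllib.request.urlopen(models_url(base_url), timeout=timeout) as resp:
            payload = json.load(resp)
    except (OSError, ValueError):   # URLError/timeouts are OSErrors; bad JSON is a ValueError
        return None
    if not isinstance(payload, dict):
        return []
    return [str(m.get("id", "?")) for m in payload.get("data", []) if isinstance(m, dict)]


if __name__ == "__main__":
    for name, url in BACKENDS.items():
        models = list_models(url)
        status = f"up, models: {models}" if models is not None else "not reachable"
        print(f"{name} ({url}): {status}")
```

Whichever base URL responds here is the one to give SillyTavern. Depending on the connection type you pick, it may expect the URL with or without the `/v1` suffix.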

Step 3: Enable Long-Term Memory (VectorDB)

Inside SillyTavern:

- Enable Vector Storage

- Select ChromaDB

- Configure memory injection depth (where recalled memories are inserted in the prompt)

- Tune recall frequency
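To make "injection depth" concrete, the sketch below assumes depth counts messages back from the end of the chat. Under that reading, depth 0 places recalled memories after the newest message, and depth 1 places them just before it. That interpretation and the function name are assumptions for illustration; check the tooltips in your SillyTavern version for the exact semantics.

```python
def inject_memories(messages: list[dict], memories: list[str], depth: int) -> list[dict]:
    """Return a new message list with a memory block inserted `depth` messages from the end."""
    if not memories:
        return list(messages)
    block = {"role": "system",
             "content": "[Memories]\n" + "\n".join(f"- {m}" for m in memories)}
    position = max(0, len(messages) - depth)   # clamp if depth exceeds the chat length
    return messages[:position] + [block] + messages[position:]


chat = [
    {"role": "user", "content": "Hey, I'm back."},
    {"role": "assistant", "content": "Welcome back! How did it go?"},
    {"role": "user", "content": "The interview went great!"},
]
for msg in inject_memories(chat, ["Sam was nervous about this interview."], depth=1):
    print(f"{msg['role']:>9}: {msg['content']}")
```

Shallow depths keep memories next to the newest message, where models tend to weight them heavily. Deeper placements are subtler but easier for the model to overlook.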

Now your AI girlfriend remembers:

- Past conversations

- Emotional milestones

- Preferences and boundaries

Creating the Persona (Character Cards)

Character Cards define who your AI is.

Include:

- Personality traits

- Speaking style

- Backstory

- Relationship dynamics

Pro Tip:

Avoid overloading the card. Let VectorDB handle evolving memories.

Use V2 Character Cards for best compatibility.
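A V2 card is JSON with `spec` set to `chara_card_v2` and the character fields nested under `data`. Cards shared as PNG images carry the same JSON embedded in the image metadata. The sketch below builds a deliberately small card for a made-up character. The mapping of the traits above onto fields (backstory into `description`, speaking style into `mes_example`, relationship dynamics into `scenario`) is one reasonable choice, not the only one.

```python
import json

card = {
    "spec": "chara_card_v2",
    "spec_version": "2.0",
    "data": {
        "name": "Aria",
        "description": "Aria is a 26-year-old illustrator who grew up by the sea.",  # backstory
        "personality": "warm, playful, curious, a little sarcastic",                  # traits
        "scenario": "Aria and {{user}} have been dating for a few months.",           # dynamics
        "first_mes": "Hey you! I was just sketching. How was your day?",
        # Speaking style, shown by example; <START> separates example dialogues.
        "mes_example": "<START>\n{{user}}: Long day.\n{{char}}: Come here, tell me everything. Tea?",
        "creator_notes": "",
        "system_prompt": "",
        "post_history_instructions": "",
        "alternate_greetings": [],
        "tags": ["companion"],
        "creator": "",
        "character_version": "1.0",
        "extensions": {},
    },
}

card_json = json.dumps(card, ensure_ascii=False, indent=2)
print(card_json)   # save this as a .json file to import it into SillyTavern
```

The card only sets up who Aria is and how she talks. Everything that happens between you later is left to the vector memory, in line with the tip above.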

Models, Performance & Optimization Tips

- 7B–8B models: Best for 8–12GB VRAM

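- Embedding models: Smaller ones embed and retrieve faster, at some cost in recall quality

- Temperature: 0.7–0.9 for emotional realism

- Context length: Longer contexts keep more history in view but use more VRAM and slow generation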
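Temperature rescales the model's scores (logits) before sampling, so that probabilities are softmax(logits / T). The sketch below shows the effect with three made-up logits. A lower T concentrates probability on the top word, which makes replies predictable. A higher T flattens the distribution, which makes replies more varied and eventually incoherent.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities after dividing by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)                      # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for three candidate next words.
words = ["smiles", "laughs", "shrugs"]
logits = [2.0, 1.0, 0.2]
for t in (0.5, 0.8, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: " + ", ".join(f"{w} {p:.2f}" for w, p in zip(words, probs)))
```

The 0.7–0.9 range recommended above sits between these extremes. Replies stay in character but don't repeat the same phrasing every time.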

Privacy, Ethics & Final Thoughts

A local AI girlfriend isn’t about replacing humans—it’s about control, privacy, and exploration.

When you run everything locally:

- No logs on third-party servers

- No moderation

- No data harvesting

You own the experience.

Final Verdict

If you’ve ever wanted an AI companion that:

- Remembers you

- Evolves over time

- Respects your privacy

This local long-term memory setup is the most powerful solution available today.