Definition Block

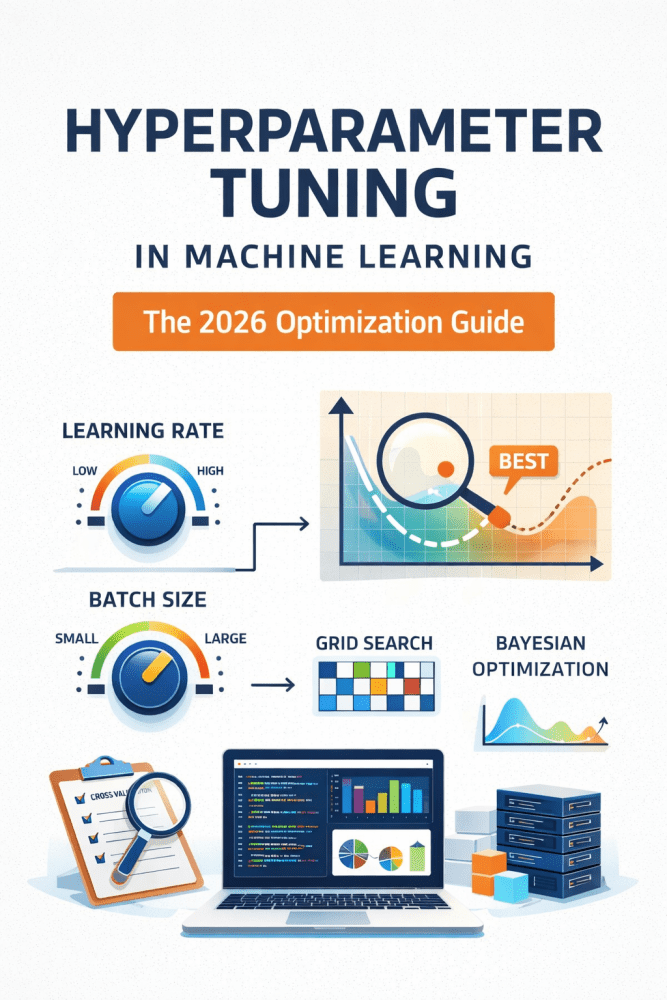

Hyperparameter tuning in machine learning is the process of systematically searching for the best configuration of model settings—such as learning rate, batch size, or tree depth—that are not learned from data but critically influence model performance, generalization, and training efficiency.

TL;DR

Hyperparameter tuning improves a model's accuracy and stability by searching a defined space of settings with automated techniques such as Grid Search, Random Search, and Bayesian Optimization. In 2026, efficient tuning is essential due to larger datasets, complex models, and cost-aware training workflows.

What Are Hyperparameters? (And Why They Matter)

Hyperparameters are external configuration variables set before training begins. Unlike model parameters (weights learned during training), hyperparameters control how the learning process happens.

Examples of key hyperparameters:

- Learning rate – the step size of each weight update

- Batch size – number of samples per training step

- Number of trees in Random Forest

- Kernel type in Support Vector Machines (SVM)
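
To make the distinction concrete, here is a minimal sketch using scikit-learn's `LogisticRegression` on synthetic data. The dataset and the specific values (`C=0.5`, `max_iter=500`) are illustrative assumptions, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic data, used here only for illustration.
X, y = make_classification(n_samples=200, n_features=5, random_state=0)

# Hyperparameters: chosen by us before training starts.
model = LogisticRegression(C=0.5, max_iter=500)
hyperparams = model.get_params()

# Parameters: learned from the data during fit().
model.fit(X, y)
learned_weights = model.coef_    # one weight per feature
learned_bias = model.intercept_

print("Hyperparameter C:", hyperparams["C"])
print("Learned weights shape:", learned_weights.shape)
```

Changing `C` changes how the weights are learned, but `C` itself is never updated by `fit()`.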

Poorly chosen hyperparameters can cause:

- Overfitting (model memorizes training data)

- Underfitting (model fails to capture patterns)

- Slow convergence or unstable training
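
As a rough illustration of the first two failure modes, the sketch below varies a single hyperparameter (`max_depth` of a decision tree) on noisy synthetic data and compares training accuracy with validation accuracy. The dataset settings and depth values are assumptions chosen for the demo.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Noisy synthetic data (flip_y randomly reassigns a share of the labels).
X, y = make_classification(
    n_samples=600, n_features=20, n_informative=5, flip_y=0.2, random_state=0
)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.3, random_state=0
)

scores = {}
for depth in (1, 4, None):  # None lets the tree grow until leaves are pure
    tree = DecisionTreeClassifier(max_depth=depth, random_state=0)
    tree.fit(X_train, y_train)
    scores[depth] = (tree.score(X_train, y_train), tree.score(X_val, y_val))
    print(f"max_depth={depth}: train={scores[depth][0]:.2f}, val={scores[depth][1]:.2f}")
```

A large gap between training and validation accuracy (typical for the unlimited-depth tree) points to overfitting, while low scores on both sets (typical for a very shallow tree) point to underfitting.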

Top 3 Hyperparameter Tuning Techniques

1. Grid Search (Exhaustive but Slow)

Grid Search evaluates every combination of values in a predefined grid.

Pros

- Simple and deterministic

- Guarantees the best-scoring combination within the grid (according to the chosen validation score)

Cons

- Computationally expensive

- Scales poorly with many hyperparameters

Best for: Small search spaces, small datasets, baseline experiments.
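
The cost of an exhaustive grid can be estimated before running anything. The sketch below counts combinations with scikit-learn's `ParameterGrid`; the extra `max_features` values are an assumption added only to show how quickly the grid grows.

```python
from sklearn.model_selection import ParameterGrid

param_grid = {
    "n_estimators": [100, 200],
    "max_depth": [None, 10, 20],
    "min_samples_split": [2, 5],
}

n_combinations = len(ParameterGrid(param_grid))  # 2 * 3 * 2
cv_folds = 5
n_fits = n_combinations * cv_folds               # one model fit per combination per fold

# Adding one more hyperparameter with 4 values multiplies the grid by 4.
param_grid["max_features"] = ["sqrt", "log2", 0.5, None]
n_combinations_bigger = len(ParameterGrid(param_grid))

print(n_combinations, n_fits, n_combinations_bigger)
```

Because the number of fits is the product of all list lengths times the number of folds, each added hyperparameter multiplies the total cost.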

2. Random Search (Faster and Surprisingly Effective)

Random Search samples random combinations instead of trying everything.

Why it often works better than Grid Search

- Tries more distinct values of each hyperparameter for the same number of trials, which helps when only a few hyperparameters really matter

- Finds good solutions faster with fewer trials

Best for: Medium to large search spaces.
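
A minimal sketch of Random Search with scikit-learn's `RandomizedSearchCV` follows. The synthetic dataset, the integer ranges, and the budget of 8 trials are assumptions for illustration.

```python
from scipy.stats import randint
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV

# Synthetic binary-classification data, for illustration only.
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Distributions instead of fixed lists: randint(low, high) samples from [low, high).
param_distributions = {
    "n_estimators": randint(20, 100),
    "max_depth": randint(2, 12),
    "min_samples_split": randint(2, 11),
}

search = RandomizedSearchCV(
    estimator=RandomForestClassifier(random_state=0),
    param_distributions=param_distributions,
    n_iter=8,         # total number of sampled configurations
    cv=3,
    scoring="f1",
    random_state=0,   # makes the sampled configurations reproducible
)
search.fit(X, y)

print("Best Parameters:", search.best_params_)
print("Best CV F1:", search.best_score_)
```

The trial budget is set directly through `n_iter`, so the cost no longer grows with the number of hyperparameters being searched.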

3. Bayesian Optimization (Gold Standard in 2026)

Bayesian Optimization fits a probabilistic surrogate model to the results of past trials and uses it to choose which hyperparameter configuration to try next.

Key advantages

- Learns from past trials

- Minimizes wasted experiments

- Ideal for deep learning and expensive models

Best for: Neural Networks, XGBoost, large datasets.
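
To show the idea rather than any particular library's implementation, here is a small from-scratch sketch: a Gaussian process surrogate with an expected-improvement acquisition function, tuning one hyperparameter. The `objective` function is a hypothetical stand-in for "train a model and return its validation score", and the search range is an assumption.

```python
import numpy as np
from scipy.stats import norm
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern


def objective(log_lr):
    """Hypothetical validation score as a function of log10(learning rate)."""
    return -(log_lr + 2.0) ** 2  # toy function that peaks at log_lr = -2


def expected_improvement(candidates, gp, best_score, xi=0.01):
    """Expected gain over the best score seen so far, for each candidate."""
    mean, std = gp.predict(candidates, return_std=True)
    std = np.maximum(std, 1e-9)  # avoid division by zero
    improvement = mean - best_score - xi
    z = improvement / std
    return improvement * norm.cdf(z) + std * norm.pdf(z)


rng = np.random.default_rng(0)
candidates = np.linspace(-5.0, 0.0, 201).reshape(-1, 1)

# Start from a few random trials.
X_tried = rng.uniform(-5.0, 0.0, size=(3, 1))
y_tried = np.array([objective(x[0]) for x in X_tried])

for _ in range(10):
    # Fit the surrogate to all trials so far ("learns from past trials").
    gp = GaussianProcessRegressor(kernel=Matern(nu=2.5), normalize_y=True, alpha=1e-6)
    gp.fit(X_tried, y_tried)

    # Pick the candidate with the highest expected improvement and evaluate it.
    ei = expected_improvement(candidates, gp, y_tried.max())
    next_x = candidates[np.argmax(ei)]
    X_tried = np.vstack([X_tried, next_x])
    y_tried = np.append(y_tried, objective(next_x[0]))

best_log_lr = X_tried[np.argmax(y_tried), 0]
print("Best log10(learning rate) found:", best_log_lr)
```

In practice each call to `objective` would be an expensive training run, which is why spending a little compute on the surrogate to pick the next trial tends to pay off.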

Comparing the Best Hyperparameter Tuning Tools (2026)

| Tool | Best Use Case | Strength |
|---|---|---|
| Optuna | Deep learning, research | Fast Bayesian optimization |
| Ray Tune | Large-scale distributed tuning | Scales across clusters |
| Hyperopt | Lightweight experiments | Simple Bayesian methods |

Step-by-Step Hyperparameter Tuning Workflow

- Define the model (e.g., Random Forest, SVM, Neural Network)

- Select evaluation metric (Accuracy, F1-score, RMSE)

- Choose cross-validation strategy

- Define the search space

- Run tuning algorithm

- Retrain the best configuration on the full training set, then evaluate it once on the held-out test set
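
The steps above can be sketched end to end as follows. This is one possible arrangement, assuming a scaled SVM on synthetic data with F1 as the metric; the grid values are placeholders.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import f1_score
from sklearn.model_selection import GridSearchCV, StratifiedKFold, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic data; the test split is set aside and not touched during tuning.
X, y = make_classification(n_samples=400, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Define the model (scaling lives inside the pipeline so it is refit per fold).
pipeline = make_pipeline(StandardScaler(), SVC())

# Select the metric and the cross-validation strategy.
scoring = "f1"
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# Define the search space (step names come from make_pipeline).
param_grid = {"svc__kernel": ["linear", "rbf"], "svc__C": [0.1, 1, 10]}

# Run the tuning algorithm; refit=True retrains the best configuration
# on all of X_train once the search finishes.
search = GridSearchCV(pipeline, param_grid, scoring=scoring, cv=cv, refit=True)
search.fit(X_train, y_train)

# Evaluate once on the held-out test set.
test_f1 = f1_score(y_test, search.predict(X_test))
print("Best Parameters:", search.best_params_)
print("Test F1:", test_f1)
```

Keeping preprocessing inside the pipeline prevents information from the validation folds leaking into the scaler during cross-validation.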

Practical Example: GridSearchCV in Scikit-Learn

Below is a simple implementation using Scikit-learn:

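```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic binary-classification data as a stand-in for your own dataset
X, y = make_classification(n_samples=500, n_features=20, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Fix the seed so repeated runs are comparable
model = RandomForestClassifier(random_state=42)

# 2 * 3 * 2 = 12 combinations, each evaluated with 5-fold cross-validation
param_grid = {
    'n_estimators': [100, 200],
    'max_depth': [None, 10, 20],
    'min_samples_split': [2, 5]
}

grid = GridSearchCV(
    estimator=model,
    param_grid=param_grid,
    cv=5,
    scoring='f1',  # binary F1; use e.g. 'f1_macro' for multiclass targets
    n_jobs=-1      # use all available CPU cores
)

grid.fit(X_train, y_train)

print("Best Parameters:", grid.best_params_)
print("Best CV F1:", grid.best_score_)
```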

Common Pitfalls (And How to Avoid Them)

❌ Tuning on test data → Tune with a validation set or cross-validation, and keep the test set for the final evaluation only

❌ Too wide search space → Start small, then refine

❌ Ignoring overfitting → Monitor validation loss, not just training accuracy

❌ One-size-fits-all metrics → Choose metric based on problem type
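
The last pitfall is easy to demonstrate. In the sketch below, a baseline that always predicts the majority class is scored on a deliberately imbalanced toy dataset (95 negatives, 5 positives, an assumption for the demo).

```python
import numpy as np
from sklearn.dummy import DummyClassifier
from sklearn.metrics import accuracy_score, f1_score

# Imbalanced toy labels: 95 negatives, 5 positives. Features are irrelevant here.
y = np.array([0] * 95 + [1] * 5)
X = np.zeros((100, 1))

# A "model" that always predicts the most frequent class.
baseline = DummyClassifier(strategy="most_frequent").fit(X, y)
pred = baseline.predict(X)

accuracy = accuracy_score(y, pred)
f1 = f1_score(y, pred, zero_division=0)  # no positive predictions at all

print(f"accuracy={accuracy:.2f}, f1={f1:.2f}")
```

Accuracy looks strong even though the baseline never finds a single positive case, while F1 exposes the problem. Tuning against accuracy on data like this would reward configurations that ignore the minority class.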

Frequently Asked Questions (FAQ)

What is the difference between parameters and hyperparameters?

Parameters are learned from data during training, while hyperparameters are set before training and control the learning process.

Why is manual tuning no longer efficient?

Modern models have high-dimensional search spaces. Manual tuning is slow, biased, and computationally wasteful compared to automated optimization.

Which tuning method is best for large datasets?

Bayesian Optimization combined with distributed tools like Ray Tune is usually a strong choice for large-scale and deep learning workloads, because each trial is expensive and trials can run in parallel across a cluster.

Final Takeaway

In 2026, hyperparameter tuning is no longer optional; it is a core competency for machine learning practitioners. Intelligent optimization methods and modern tools can improve model performance while cutting the number of wasted training runs.

If your goal is state-of-the-art results, focus on:

- Bayesian optimization

- Proper cross-validation

- Metric-driven decision-making
