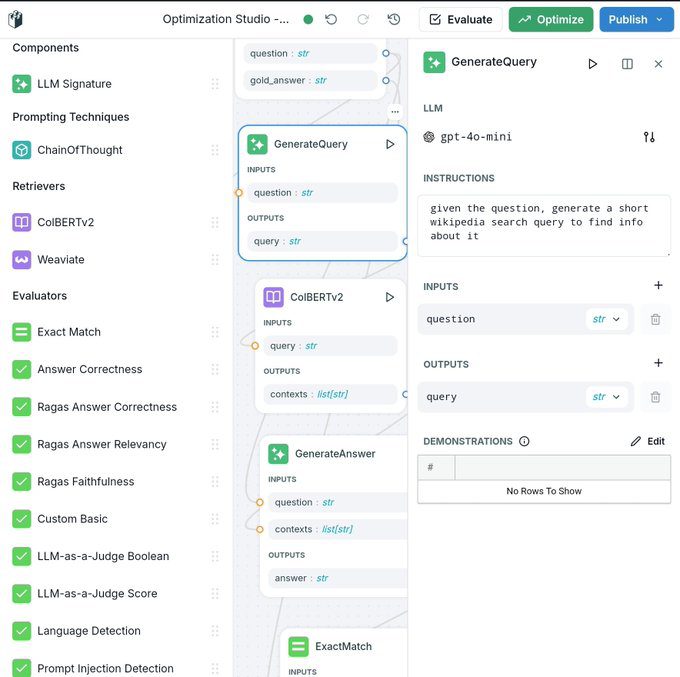

Langwatch, a niche tool for optimizing LLM (large language model) programs, has been drawing positive feedback from users. One user tweeted, ‘I’m using Langwatch right now on my smartphone, everything runs extremely smoothly. I’m surprised how clean, pretty, and responsive it is for a niche application like LLM program optimization. Works so well it almost feels like a mobile game.’ The tweet points to a smooth, user-friendly experience, even on a phone.

The Rise of LLM Optimization Tools

The development and optimization of LLMs have become crucial in the AI/ML industry. Tools like Langwatch are designed to enhance the efficiency and performance of these models. Despite the complexity of LLMs, Langwatch manages to provide a smooth and responsive user experience, making it a valuable tool for developers and researchers in the field.

LangChain: A Comparison

While Langwatch receives praise for its performance, LangChain, another framework for LLM development, has faced criticism. According to an article in Analytics India Magazine, LangChain is considered great for prototyping but unsuitable for production. Many developers found it overly complicated and error-prone, which has soured sentiment towards using it in production environments.

Opera’s Integration of LLMs

Opera, meanwhile, has integrated LLMs directly into its web browser. As reported by TechCrunch, Opera allows users to download and run LLMs locally, providing access to a wide selection of third-party LLMs within the browser. This integration is expected to influence how users interact with AI inside web browsers, offering a new level of convenience and functionality.

Langdock’s Approach to Avoid Vendor Lock-In

Another noteworthy development in the LLM space is Langdock’s recent funding round. As detailed in a TechCrunch article, Langdock raised $3 million to help companies avoid vendor lock-in with LLMs. Their chat interface allows companies to access and utilize various LLMs without being tied to a single provider, ensuring regulatory compliance and offering flexibility in model selection.
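To make the idea of avoiding vendor lock-in concrete, here is a minimal Python sketch of a provider-agnostic routing layer. It is not Langdock's implementation, which the article does not describe; `ModelRouter`, `vendor_a`, and `vendor_b` are hypothetical names, and the vendor functions are stand-ins for real API clients.

```python
from typing import Callable, Dict

# Assumption: a "provider" is any function that maps a prompt to a completion.
Provider = Callable[[str], str]


class ModelRouter:
    """Hypothetical registry that hides provider-specific clients behind one call."""

    def __init__(self) -> None:
        self._providers: Dict[str, Provider] = {}

    def register(self, name: str, provider: Provider) -> None:
        # Adding or swapping a provider does not change any calling code.
        self._providers[name] = provider

    def complete(self, name: str, prompt: str) -> str:
        if name not in self._providers:
            raise KeyError(f"unknown provider: {name}")
        return self._providers[name](prompt)


# Hypothetical stand-ins for real vendor SDK calls.
def vendor_a(prompt: str) -> str:
    return f"[vendor-a] {prompt}"


def vendor_b(prompt: str) -> str:
    return f"[vendor-b] {prompt}"


router = ModelRouter()
router.register("vendor-a", vendor_a)
router.register("vendor-b", vendor_b)
```

Application code that only ever calls `router.complete(name, prompt)` can switch models by changing the `name` argument, which is the kind of flexibility the article attributes to tools in this category.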

Wordy’s Innovative Language Learning App

On the consumer side, Wordy has launched an innovative app that helps users learn vocabulary while watching movies and TV shows. According to TechCrunch, Wordy’s app provides real-time translation and definitions of unknown words, using AI to follow along with the episode. This application showcases the potential of AI in personalized language learning.

Apple’s Enhanced Language Support in iOS 18

Apple has also made significant improvements in language support with the release of iOS 18. As reported by TechCrunch, iOS 18 includes features like customizable lock screen numerals, trilingual predictive typing, and improved language search, enhancing the user experience for multilingual users.

The Importance of Performance in AI Tasks

Performance is a critical factor in AI tasks, and recent findings suggest that inference is significantly faster on Linux than on Windows. An article in Analytics India Magazine reports that Ubuntu is approximately 20-30% faster than Windows in text generation inference workloads and 50-60% faster in image generation workloads. A gain of this size is likely to influence which operating system AI developers prefer.
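Readers who want to check such a gap on their own hardware could time the same workload on each system and compare throughput. The sketch below is one way to do that in Python, not the methodology behind the reported figures; `fake_generate` is a hypothetical stand-in for a real model call, and "percent faster" is assumed here to mean a ratio of tokens per second.

```python
import time
from typing import Callable, List


def tokens_per_second(generate: Callable[[str], List[str]], prompt: str, runs: int = 3) -> float:
    """Return the best throughput observed over `runs` calls to `generate`."""
    best = 0.0
    for _ in range(runs):
        start = time.perf_counter()
        tokens = generate(prompt)
        elapsed = time.perf_counter() - start
        if elapsed > 0:
            best = max(best, len(tokens) / elapsed)
    return best


def percent_faster(throughput_a: float, throughput_b: float) -> float:
    """How much faster A is than B, as a percentage of B's throughput."""
    return (throughput_a / throughput_b - 1.0) * 100.0


def fake_generate(prompt: str) -> List[str]:
    # Hypothetical placeholder: replace with a real inference call.
    time.sleep(0.01)
    return prompt.split()
```

Running `tokens_per_second` with the same model and prompt on each operating system, then passing the two results to `percent_faster`, gives a number comparable to the ranges quoted above. For example, 125 tokens per second against 100 tokens per second works out to 25% faster.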

Related Articles

- Creating AI Apps without Coding: The Power of Langflow

- Load Testing LLM Applications with K6 and Grafana

- Using AI for Mobile App Testing

- Gemini Live: The Future of Consumer AI Voice Interaction

- LangChain Conceptual Guides: A Comprehensive Resource for Building LLM Apps
