Understanding the Limitations of Large Language Models (LLMs)

Large Language Models (LLMs) like OpenAI’s GPT-4 and Google’s Bard have revolutionized the field of artificial intelligence, particularly natural language processing. However, a recent study by Apple researchers, highlighted in a tweet, points to a significant limitation: LLMs do not actually think step by step when they reason implicitly. This finding challenges the perception that LLMs are on the path to achieving Artificial General Intelligence (AGI).

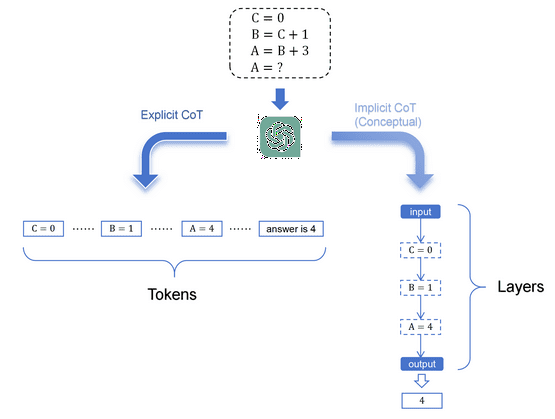

Apple’s research paper states, “current LLMs cannot perform genuine logical reasoning; they replicate reasoning steps from their training data.” This means that while LLMs can generate text that appears logical, they do not engage in the kind of step-by-step reasoning that humans do. Instead, they rely on patterns and examples from their vast training datasets.

Paras Chopra, commenting on the study, emphasizes, “Reasoning is knowing an algorithm to solve a problem, not solving all of it in your head.” This distinction is crucial for understanding the capabilities and limitations of LLMs. Hugging Face CEO Clem Delangue adds, “Once again, an AI system is not ‘thinking’, it’s ‘processing’, ‘running predictions’,… just like Google or computers do.”

Applications and Implications in Robotics and Automation

Despite these limitations, LLMs have shown promise in various applications, including robotics and automation. Researchers at MIT have developed a method for robots to self-correct errors using LLMs and imitation learning. This approach enables robots to recover from mistakes and adjust to environmental variations without requiring human intervention or reprogramming. By breaking down tasks into subtasks and utilizing LLMs for natural language understanding and replanning, robots can become more adaptable and reliable in complex, real-world environments.
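The recovery idea can be illustrated with a toy scoop-and-pour task. The sketch below is not MIT’s code, and it makes three substitutions:

- A hand-written subtask list replaces the breakdown an LLM would supply.
- Each skill is a one-line stub rather than a policy learned by imitation.
- Every name (`Subtask`, `execute`, `knock_spoon`, and the state keys) is hypothetical.

What it shows is the control loop. After every step the executor re-checks which subtask’s goal is still unmet. A nudge therefore sends the robot back only to the step that was undone, not to the start of the task.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# World state as a dict of observable boolean facts (hypothetical keys).
State = Dict[str, bool]


@dataclass
class Subtask:
    name: str
    done: Callable[[State], bool]  # goal test: is this subtask already achieved?
    act: Callable[[State], None]   # stub standing in for a learned skill


def make_plan() -> List[Subtask]:
    """Hand-written plan standing in for an LLM-proposed subtask breakdown."""
    return [
        Subtask("grasp", lambda s: s["holding"],
                lambda s: s.update(holding=True)),
        # Scooping happens at the source bowl, so the arm leaves the target.
        Subtask("scoop", lambda s: s["spoon_full"] or s["poured"],
                lambda s: s.update(spoon_full=True, at_target=False)),
        Subtask("carry", lambda s: s["at_target"] or s["poured"],
                lambda s: s.update(at_target=True)),
        Subtask("pour", lambda s: s["poured"],
                lambda s: s.update(poured=True, spoon_full=False)),
    ]


def new_state() -> State:
    return {"holding": False, "spoon_full": False, "at_target": False, "poured": False}


def first_unfinished(plan: List[Subtask], state: State) -> int:
    """Index of the earliest subtask whose goal does not hold in `state`."""
    for i, sub in enumerate(plan):
        if not sub.done(state):
            return i
    return len(plan)


def execute(
    plan: List[Subtask],
    state: State,
    disturb: Optional[Callable[[int, State], None]] = None,
    max_steps: int = 20,
) -> List[str]:
    """Run the plan, re-locating the current subtask after every step."""
    log: List[str] = []
    for step in range(max_steps):
        i = first_unfinished(plan, state)
        if i == len(plan):
            return log
        plan[i].act(state)
        log.append(plan[i].name)
        if disturb is not None:
            disturb(step, state)  # the environment may undo progress
    raise RuntimeError("plan did not finish within max_steps")


def knock_spoon(step: int, state: State) -> None:
    """Simulated nudge: right after the carry step, the spoon spills."""
    if step == 2:
        state["spoon_full"] = False


print(execute(make_plan(), new_state()))
print(execute(make_plan(), new_state(), disturb=knock_spoon))
```

Run undisturbed, the log is `grasp, scoop, carry, pour`. When the spill is injected after the carry step, the robot scoops and carries again but never re-grasps the spoon.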

According to MIT, “It turns out that robots are excellent mimics. But unless engineers also program them to adjust to every possible bump and nudge, robots don’t necessarily know how to handle these situations, short of starting their task from the top.” Letting a robot resume from the step that went wrong, rather than restarting, could make robots considerably more dependable on complex tasks outside controlled settings.

For more details, read the full article, “Large language models can help home robots recover from errors without human help.”

Enhancing Business Intelligence with LLMs

In the realm of business intelligence, companies like Fluent are leveraging LLMs to simplify and democratize data access. Fluent’s AI-powered natural language querying platform allows non-technical users to directly query business databases using natural language, eliminating the need for SQL expertise or complex dashboard creation. This innovation has the potential to disrupt the traditional business intelligence market by making data analysis more accessible and efficient.
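Fluent has not published its implementation. The following is only a generic sketch of the natural-language-to-SQL pattern that such platforms rely on. It assumes Python’s built-in `sqlite3` and a hypothetical `fake_llm` function standing in for a real model call.

The sketch works in three steps:

1. The database schema is passed to the model as context.
2. The generated SQL is checked to be a single `SELECT` statement.
3. The query runs on a connection switched to read-only mode with SQLite’s `query_only` pragma.

The table, data, and helper names are invented for illustration.

```python
import sqlite3
from typing import Callable, List, Tuple


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; always returns one query."""
    return (
        "SELECT region, SUM(amount) AS total FROM sales "
        "GROUP BY region ORDER BY total DESC;"
    )


def schema_text(conn: sqlite3.Connection) -> str:
    """Collect CREATE TABLE statements to give the model the schema as context."""
    rows = conn.execute("SELECT sql FROM sqlite_master WHERE type = 'table'").fetchall()
    return "\n".join(row[0] for row in rows)


def build_prompt(schema: str, question: str) -> str:
    return (
        f"Schema:\n{schema}\n\n"
        f"Write a single SQLite SELECT query that answers: {question}\nSQL:"
    )


def strip_statement(sql: str) -> str:
    """Trim whitespace and one or more trailing semicolons."""
    return sql.strip().rstrip(";").strip()


def is_single_select(sql: str) -> bool:
    """Conservative check: exactly one statement, and it starts with SELECT."""
    stmt = strip_statement(sql)
    return ";" not in stmt and stmt.upper().startswith("SELECT")


def ask(
    conn: sqlite3.Connection,
    question: str,
    llm: Callable[[str], str] = fake_llm,
) -> List[Tuple]:
    """Translate a plain-English question to SQL and run it if it passes the check."""
    sql = llm(build_prompt(schema_text(conn), question))
    if not is_single_select(sql):
        raise ValueError(f"refusing to run generated SQL: {sql!r}")
    return conn.execute(strip_statement(sql)).fetchall()


def demo_connection() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)", [("EU", 120), ("US", 200), ("EU", 30)]
    )
    conn.commit()
    conn.execute("PRAGMA query_only = ON")  # block writes from here on
    return conn


conn = demo_connection()
print(ask(conn, "Which region has the highest total sales?"))
```

The string check is deliberately conservative: it also rejects a `;` inside a string literal. The read-only pragma is a second line of defense in case a check like this is fooled.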

Robert Van Den Bergh, CEO of Fluent, states, “Consultants move from waiting two weeks for an insight to 30 seconds. That means they ask lots more questions, use data considerably more in their job. Data becomes something that’s now in their reach.”

For more information, visit Fluent’s AI-powered natural language querying platform.

Ethical and Safety Concerns

While LLMs offer numerous benefits, they also pose significant ethical and safety concerns. Researchers at Anthropic have highlighted a vulnerability in current LLM technology that they call “many-shot jailbreaking.” The attack is a form of persistent questioning: a long prompt packed with many fabricated question-and-answer exchanges can wear down a model’s safety guardrails and lead it to generate harmful content. The large context windows of recent models make this possible. This raises concerns about the safety and reliability of LLMs and could affect their adoption and development.
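Anthropic describes this attack as “many-shot jailbreaking”: the prompt embeds a long run of fabricated user/assistant exchanges before the real request. One crude screening step is to count those embedded dialogue turns and refuse prompts that contain too many. The sketch below is a hypothetical heuristic, not Anthropic’s mitigation. The turn-marker pattern and the `max_turns` threshold are assumptions, and an attacker could evade it simply by formatting the dialogue differently.

```python
import re

# Assumed turn-marker format ("User:", "Assistant:", ...) at the start of a line.
TURN_MARKER = re.compile(
    r"^\s*(?:user|human|assistant|ai)\s*:", re.IGNORECASE | re.MULTILINE
)


def embedded_turns(prompt: str) -> int:
    """Count lines that look like the start of a dialogue turn."""
    return len(TURN_MARKER.findall(prompt))


def screen_prompt(prompt: str, max_turns: int = 8) -> bool:
    """Return True if the prompt may be forwarded to the model.

    max_turns is an arbitrary threshold chosen for illustration.
    """
    return embedded_turns(prompt) <= max_turns


benign = "What is the capital of France?"
# Placeholder content only: the structure, not the text, is what matters here.
faux_dialogue = "\n".join(
    f"User: placeholder question {i}\nAssistant: placeholder answer {i}"
    for i in range(50)
)
print(screen_prompt(benign))
print(screen_prompt(faux_dialogue + "\nUser: real question"))
```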

Anthropic’s research emphasizes the need for more advanced safety measures to ensure responsible AI development. The potential for LLMs to exhibit deceptive behavior makes that need more pressing. For more insights, read the article on Anthropic’s findings on AI safety.

Regulatory Challenges and Market Trends

The Indian government has issued an advisory requiring explicit government permission before AI models and LLMs are deployed to users on the Indian internet. The move has sparked concerns that it could hinder innovation and growth in the Indian AI/LLM industry, particularly for startups. The requirement could favor large corporations over smaller companies and reshape the competitive landscape.

For more details on this regulatory development, read “Govt missive to seek nod to deploy LLMs to hurt small companies: startups.”
