The Flashy Beginnings of LLM Development

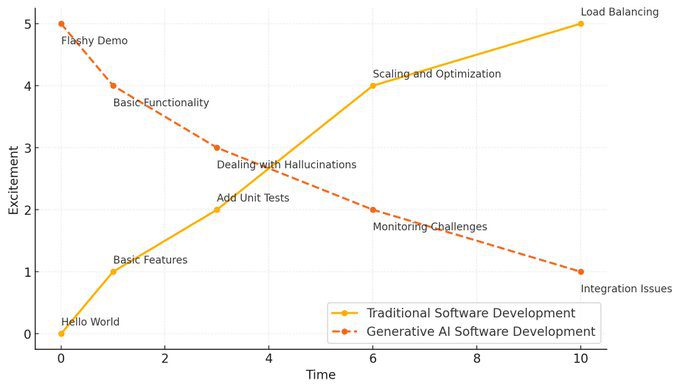

In the realm of Large Language Models (LLMs), initial demonstrations often captivate audiences with their flashy capabilities. However, as highlighted in a recent tweet by @stefkrawczyk, the journey from a proof-of-concept to a fully functional production system can be fraught with challenges. The tweet invites developers to a 30-minute primer on the intricacies of the Software Development Lifecycle (SDLC) for LLM systems, emphasizing the importance of monitoring non-deterministic outputs and moving beyond hallucinations in production.

The Software Development Lifecycle (SDLC) for LLM Systems

Understanding the SDLC for LLM systems is crucial for transitioning from a demo to a production-ready application. This lifecycle involves several stages, including requirement analysis, design, implementation, testing, deployment, and maintenance. Each stage presents unique challenges, particularly when dealing with the non-deterministic nature of LLM outputs. Effective monitoring and testing strategies are essential to ensure that the system performs reliably in real-world scenarios.

Monitoring Non-Deterministic Outputs & Testing

One of the significant hurdles in LLM development is managing non-deterministic outputs. Unlike traditional software systems, LLMs can produce varying results for the same input, making it challenging to ensure consistency and reliability. Monitoring these outputs involves setting up robust logging and alerting mechanisms to detect anomalies and unexpected behaviors. Testing strategies must also evolve to include techniques like adversarial testing and continuous integration to validate the model’s performance under different conditions.
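One way to make this concrete is to sample the same prompt several times and track how often the answers agree, logging a warning when agreement falls below a threshold. The sketch below is a minimal illustration, not a prescribed method: `fake_llm`, `agreement_rate`, `check_prompt`, and the 0.8 threshold are hypothetical names and values chosen for the example, and `fake_llm` stands in for a real model call.

```python
import logging
import random
from collections import Counter
from typing import Callable

logger = logging.getLogger("llm_monitor")


def fake_llm(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a real model call; answers vary between calls."""
    return rng.choice(["Paris", "Paris", "Paris", "paris ", "Lyon"])


def normalize(text: str) -> str:
    """Collapse trivial differences (case, whitespace) before comparing answers."""
    return " ".join(text.lower().split())


def agreement_rate(generate: Callable[[str], str], prompt: str, samples: int = 20) -> float:
    """Call the model `samples` times and return the share of the most common answer."""
    counts = Counter(normalize(generate(prompt)) for _ in range(samples))
    return counts.most_common(1)[0][1] / samples


def check_prompt(
    generate: Callable[[str], str],
    prompt: str,
    threshold: float = 0.8,  # assumed cut-off; tune per use case
    samples: int = 20,
) -> bool:
    """Return True if answers are consistent enough; log a warning otherwise."""
    rate = agreement_rate(generate, prompt, samples)
    if rate < threshold:
        logger.warning("Low agreement %.2f for prompt %r", rate, prompt)
        return False
    logger.info("Agreement %.2f for prompt %r", rate, prompt)
    return True


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    rng = random.Random(0)  # seeded so the demo run is repeatable
    check_prompt(lambda p: fake_llm(p, rng), "What is the capital of France?")
```

A check like this can run in continuous integration against a fixed prompt set, or periodically against sampled production prompts with the warning wired to an alerting channel. Exact-match agreement only suits short, factual answers; longer outputs would need a looser similarity measure.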

Moving Beyond Hallucinations in Production

Hallucinations, where LLMs generate plausible but incorrect or nonsensical information, are a significant concern in production environments. Addressing them requires a combination of techniques: better data curation, fine-tuning the model with domain-specific data, post-processing filters and other validation steps on the output, and human-in-the-loop review for critical tasks.

Companies like Unlikely AI are building trustworthy AI platforms that combine neural network approaches with symbolic AI (a neuro-symbolic approach) and traditional software methods, aiming to tackle bias, hallucination, accuracy, and trust in AI.

Laredo Labs is another company leveraging AI to automate development work. Its AI-driven platform for code generation takes natural language commands, and the company claims to have one of the most comprehensive software engineering datasets. According to the company, this enables repository-level task completion and a ‘full stack’ machine learning approach that could change software development workflows and productivity.
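The post-processing filter and human-in-the-loop ideas from this section can be sketched as a small grounding check. The example below assumes a retrieval-style setup where each answer comes with the source context it should be based on. It flags any number in the answer that never appears in that context and routes flagged or critical answers to a human reviewer. `Verdict`, `extract_numbers`, and `review_answer` are hypothetical names, and checking only numbers is a deliberately narrow heuristic, not a complete hallucination detector.

```python
import re
from dataclasses import dataclass


@dataclass
class Verdict:
    answer: str
    unsupported: list[str]    # numbers found in the answer but not in the context
    needs_human_review: bool  # True when a person should check before release


def extract_numbers(text: str) -> set[str]:
    """Return every integer or decimal literal that appears in the text."""
    return set(re.findall(r"\d+(?:\.\d+)?", text))


def review_answer(answer: str, context: str, critical: bool = False) -> Verdict:
    """Flag numbers the context does not support; always escalate critical tasks."""
    unsupported = sorted(extract_numbers(answer) - extract_numbers(context))
    return Verdict(
        answer=answer,
        unsupported=unsupported,
        needs_human_review=critical or bool(unsupported),
    )


if __name__ == "__main__":
    context = "The refund window is 30 days from delivery."
    print(review_answer("You can return items within 45 days.", context))
    print(review_answer("Refunds are accepted for 30 days.", context))
```

In practice a filter like this would sit between the model and the user, with flagged answers placed in a review queue instead of being returned directly.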

Breaking Free from Proof-of-Concept Hell

The transition from a proof-of-concept to a production-ready system is often referred to as ‘proof-of-concept hell.’ This phase is characterized by the challenges of scaling, maintaining performance, and ensuring reliability. To break free from this phase, developers must adopt best practices in SDLC, implement rigorous testing and monitoring, and continuously refine their models to reduce errors and improve accuracy.

In LLM development, what starts flashy often ends in proof-of-concept purgatory. By understanding the SDLC for LLM systems, monitoring non-deterministic outputs, and addressing hallucinations in production, developers can navigate these complexities and deploy LLM applications successfully.

Related Articles

- 5 Ways to Implement AI into Your Business Now

- The Dell AI Factory: Your Gateway to Confident AI Adoption

- Benefits of Combining Manual Testing with Automation Software

- Dive into the Future of Fully Homomorphic Encryption (FHE) and Artificial Intelligence (AI) with DIN

- Model-Based Design & AI: Accelerate Medical Innovation
