The Power of LLMs and RAG

Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) systems have shown considerable potential across many industries. They can generate human-like text, answer queries, and surface insights from large datasets. Without a robust, systematic evaluation pipeline, however, it is hard to use them effectively. As a recent Analytics India Magazine article notes, combining LLMs with RIG (Retrieval Interleaved Generation) can significantly improve the accuracy and reliability of AI-generated information.

The Need for Systematic Evaluation

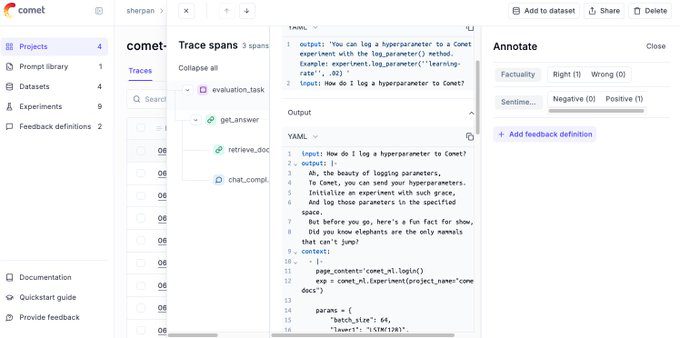

Systematic evaluation is crucial for improving the performance and reliability of GenAI applications. A strong evaluation pipeline helps identify and mitigate issues such as data drift, bias, and hallucinated responses. According to a report by AIM, the startup Ragas has built an open-source engine for automated evaluation of RAG-based applications, and it has gained significant traction in the AI community.
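To make "catching hallucinations" concrete, here is a minimal sketch of one check a pipeline might run: flag answer sentences whose words barely appear in the retrieved context. This is a crude lexical proxy chosen for illustration, not Ragas's method (Ragas's own faithfulness metric relies on an LLM judge). The function names, the small stopword list, and the 0.5 threshold are all assumptions.

```python
import re

# Hypothetical, deliberately small stopword list; a real pipeline would use a fuller one.
STOPWORDS = {"a", "an", "the", "is", "are", "was", "were", "of", "in", "on", "and",
             "or", "to", "for", "with", "by", "it", "its", "as", "at"}


def content_tokens(text: str) -> set[str]:
    """Lowercased alphanumeric tokens with stopwords removed."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}


def flag_unsupported_sentences(answer: str, contexts: list[str],
                               threshold: float = 0.5) -> list[tuple[str, float]]:
    """Return (sentence, support) pairs whose support falls below `threshold`.

    support = fraction of a sentence's content tokens found anywhere in the contexts.
    """
    context_vocab: set[str] = set()
    for ctx in contexts:
        context_vocab |= content_tokens(ctx)

    flagged = []
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        tokens = content_tokens(sentence)
        if not tokens:
            continue
        support = len(tokens & context_vocab) / len(tokens)
        if support < threshold:
            flagged.append((sentence, support))
    return flagged


if __name__ == "__main__":
    contexts = ["The Eiffel Tower is located in Paris and was completed in 1889."]
    answer = "The Eiffel Tower is in Paris. It was designed by a committee of astronauts."
    for sentence, support in flag_unsupported_sentences(answer, contexts):
        print(f"{support:.2f}  {sentence}")  # prints "0.00  It was designed by a committee of astronauts."
```

Lexical overlap misses paraphrases and can be fooled by answers that reuse context words in a false claim, which is why production evaluators tend to use model-based judges; the value of a sketch like this is that it is cheap, deterministic, and easy to run on every build.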

Standardized Evaluation Methods

Standardized evaluation methods are essential for keeping AI applications consistent and of high quality. Ragas, which is backed by Y Combinator, has introduced standardized evaluation methods for RAG systems that have been well received by the community. According to its founder, Shahul, the company aims to become the standard for evaluating LLM applications.
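What "standardized" buys you is that every sample is stored in the same schema and scored by the same fixed set of metrics, so numbers from different runs can be compared. The sketch below shows that idea in plain Python; it is not the Ragas API. The `EvalSample` fields, the two toy metrics (`exact_match`, `context_recall`), and the `evaluate` helper are hypothetical names chosen for illustration.

```python
import re
from dataclasses import dataclass
from statistics import mean
from typing import Callable


@dataclass
class EvalSample:
    """One evaluation record in a fixed schema (field names are illustrative)."""
    question: str
    contexts: list[str]
    answer: str
    reference: str


def _tokens(text: str) -> set[str]:
    """Lowercased alphanumeric tokens, so punctuation does not block matches."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def exact_match(s: EvalSample) -> float:
    """1.0 if the answer equals the reference after trimming and lowercasing, else 0.0."""
    return float(s.answer.strip().lower() == s.reference.strip().lower())


def context_recall(s: EvalSample) -> float:
    """Fraction of reference tokens that appear somewhere in the retrieved contexts."""
    ref = _tokens(s.reference)
    if not ref:
        return 0.0
    ctx = set().union(*(_tokens(c) for c in s.contexts))
    return len(ref & ctx) / len(ref)


# The same metric set is applied to every run, which is what makes results comparable.
METRICS: dict[str, Callable[[EvalSample], float]] = {
    "exact_match": exact_match,
    "context_recall": context_recall,
}


def evaluate(samples: list[EvalSample],
             metrics: dict[str, Callable[[EvalSample], float]] = METRICS) -> dict[str, float]:
    """Score every sample with every metric and report the mean per metric."""
    return {name: mean(fn(s) for s in samples) for name, fn in metrics.items()}


if __name__ == "__main__":
    samples = [
        EvalSample("What is the capital of France?",
                   ["Paris is the capital of France."], "Paris", "Paris"),
        EvalSample("When was the Eiffel Tower completed?",
                   ["The tower opened to visitors in 1889."], "1887", "1889"),
    ]
    print(evaluate(samples))  # {'exact_match': 0.5, 'context_recall': 1.0}
```

The second sample shows why more than one metric matters: retrieval found the right fact (recall 1.0) but generation still got it wrong (exact match 0.0), which points the fix at the generator rather than the retriever.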

Overcoming Challenges in AI Implementation

Implementing AI, and generative AI in particular, brings its own challenges. As a TechCrunch article discusses, many companies are approaching generative AI cautiously because of concerns about data availability, governance, and demonstrating return on investment (ROI). Experts such as Mike Mason and Akira Bell stress that data readiness and governance are key to executing AI projects successfully.

The Role of RIG in Improving Accuracy

RIG (Retrieval Interleaved Generation) is a technique that connects LLMs to Data Commons to reduce hallucinations and improve accuracy. By pulling statistics from trusted sources during generation, it aims to give more up-to-date and accurate answers. Evaluations of the RIG approach show promising results, with fine-tuned models achieving better factual accuracy.
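The core RIG idea, where the model marks the spots where it needs a fact and a trusted source fills them in, can be sketched as a post-processing step. Everything here is an assumption for illustration: the `[DC("...")]` marker syntax, the `resolve_markers` helper, and the in-memory `TRUSTED_STATS` dictionary standing in for Data Commons (its value is a placeholder for a fictional country, not real data). The actual RIG pipeline uses a fine-tuned model and the real Data Commons service.

```python
import re

# Hypothetical marker the fine-tuned model is assumed to emit wherever it needs a statistic.
MARKER = re.compile(r'\[DC\("([^"]+)"\)\]')

# Stand-in for a trusted source such as Data Commons; the value is a placeholder, not real data.
TRUSTED_STATS = {
    "population of Exampleland in 2020": "1,234,567",
}


def resolve_markers(draft: str, lookup: dict[str, str]) -> str:
    """Replace each query marker in the model's draft with a value from the trusted lookup."""
    def substitute(match: re.Match) -> str:
        query = match.group(1)
        value = lookup.get(query)
        # If the trusted source has no answer, surface that instead of letting the model guess.
        return value if value is not None else f"[no trusted data for: {query}]"

    return MARKER.sub(substitute, draft)


if __name__ == "__main__":
    draft = ('Exampleland had [DC("population of Exampleland in 2020")] residents in 2020. '
             'Its GDP was [DC("GDP of Exampleland in 2020")].')
    print(resolve_markers(draft, TRUSTED_STATS))
```

Leaving an explicit "no trusted data" note, rather than falling back to the model's own number, keeps unverifiable statistics visible to the reader and to any downstream evaluation.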

The Importance of Third-Party Critique

Third-party critique and transparent evaluation methods are vital to the credibility of AI models. As a TechCrunch article points out, the current benchmarking culture is often broken, and better benchmarks are needed to validate the claims AI developers make. Researchers such as Marzena Karpinska and Michael Saxon advocate for more rigorous and transparent evaluation.
