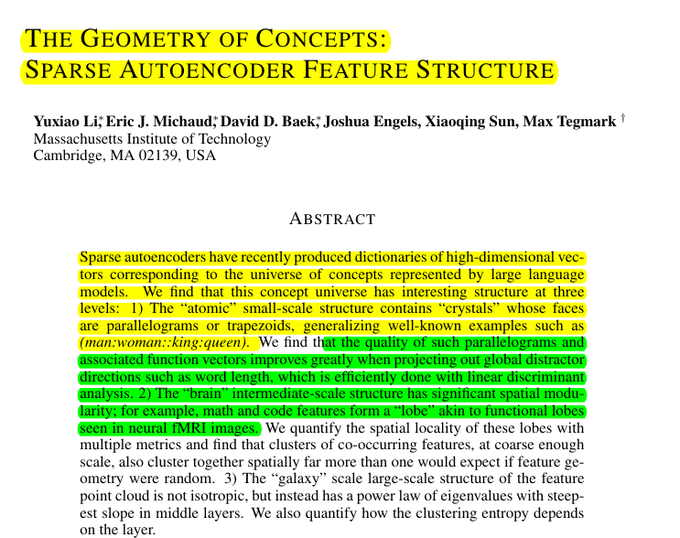

Artificial intelligence has shifted from experimentation to enterprise infrastructure. Today, organizations are deploying scalable AI systems that connect directly to core business workflows. As a result, companies need platforms that are unified, reliable, and easy to manage. Built on Google Cloud Platform, Vertex AI provides a single environment for model development, generative AI, and MLOps […]