Writing an excellent essay involves many obstacles. Two of the most common concern word count: the essay is shorter than the required length, or it exceeds the stated range. In the second case, you need to reduce the word count without compromising the quality of the essay. The ideal word count for an essay is around 650 words.

There are several strategies for making an essay shorter: trimming irrelevant words, removing unnecessary adverbs and adjectives, and shortening paragraphs that have more than seven sentences.

However, this process can be stressful when you’re under pressure to meet a deadline, and applying all of these strategies well is not something you can master in a short time. Hence, we looked for an alternative way to reduce the word count of an essay with the least effort on your part.

Using AI to reduce the word count of essays

With advances in language technology, this problem can be solved with AI, and that is what textify.ai did. Its team of engineers built an AI tool that reduces the word count of a text using state-of-the-art transformer models.

Text summarization is an application of Natural Language Processing (NLP). It is the task of generating a shorter version of a document that captures all of its important points, and it is framed as a sequence-to-sequence task. Instead of manually going through a pile of text, we can get straight to the crucial topics of the document.

Essay text summarization

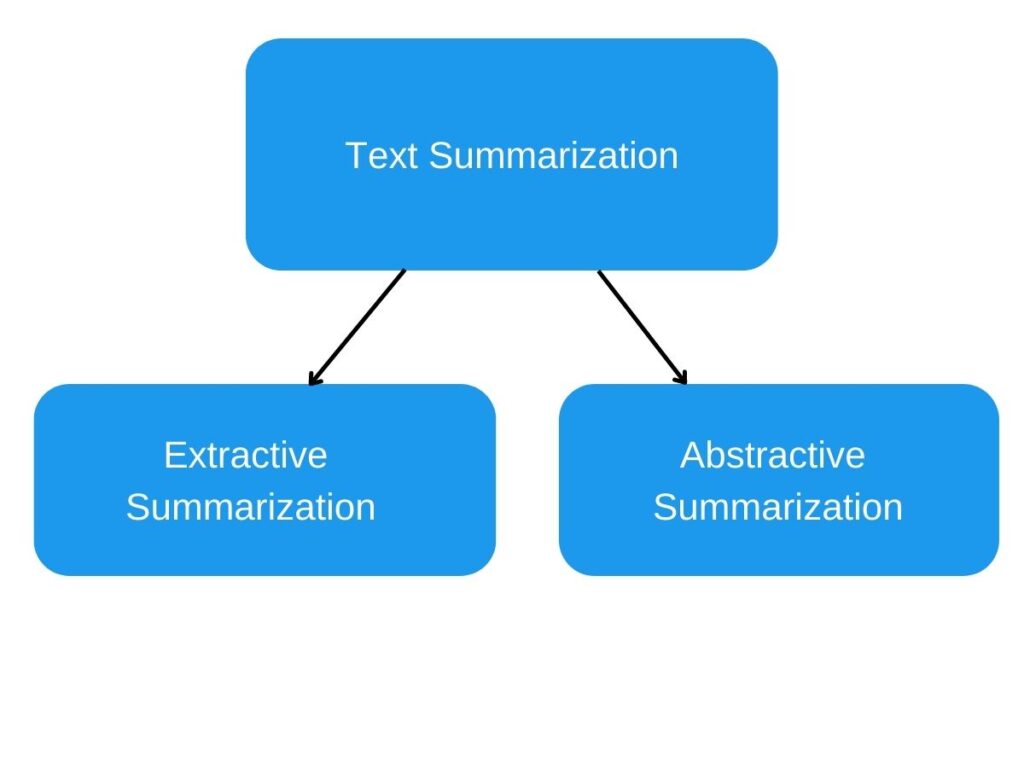

When it comes to summarization, there are two main approaches: extractive summarization and abstractive summarization. Although the two sound similar, they differ sharply in how they generate the summary of a document.

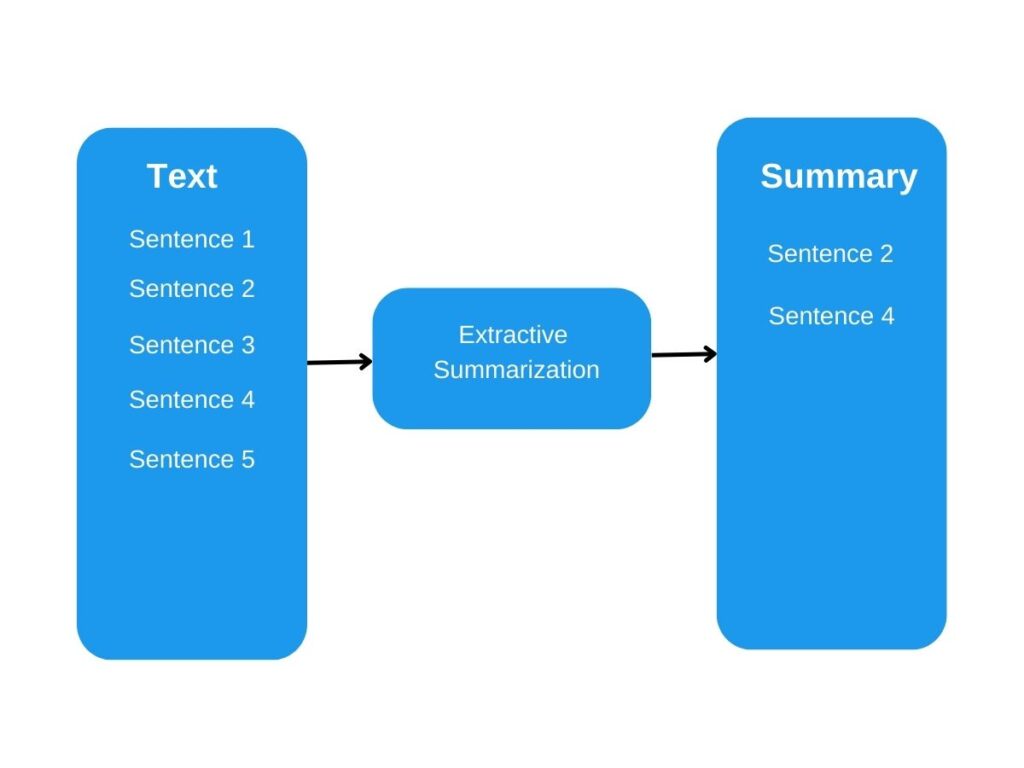

An extractive summarizer keeps only the most important sentences and phrases from the original text. It ranks all of the sentences by their relevance to the text as a whole and presents the highest-ranked sentences as the summary of the document.
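To make the ranking idea concrete, here is a minimal extractive sketch in Python. It scores sentences with simple word frequencies, which is an assumption chosen for illustration: it is not the method textify.ai uses (the article goes on to favour abstractive summarization), and the stop-word list, function names, and scoring rule are all hypothetical.

```python
import re
from collections import Counter

# A tiny, illustrative stop-word list (an assumption; real systems use larger lists).
STOP_WORDS = {
    "a", "an", "the", "and", "or", "but", "of", "to", "in", "on", "for",
    "is", "are", "was", "were", "it", "this", "that", "with", "as", "by",
}


def split_sentences(text: str) -> list[str]:
    """Naively split text into sentences after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def tokenize(sentence: str) -> list[str]:
    """Lowercase the sentence and keep the words that are not stop words."""
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOP_WORDS]


def extractive_summary(text: str, num_sentences: int = 2) -> str:
    """Return the highest-scoring sentences, kept in their original order."""
    sentences = split_sentences(text)
    # Document-wide word frequencies act as a crude relevance signal.
    freq = Counter(w for s in sentences for w in tokenize(s))

    def score(sentence: str) -> float:
        words = tokenize(sentence)
        # Average (not total) frequency, so long sentences are not favoured for length alone.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])  # restore document order
    return " ".join(sentences[i] for i in keep)


text = "Essays need focus. Focus keeps essays short. Cats sleep all day. Short essays need focus and clarity."
print(extractive_summary(text, 2))
```

Every sentence in the output is copied verbatim from the input, which is exactly what distinguishes extractive from abstractive summarization. Production extractive systems typically use stronger relevance signals, such as TF-IDF weighting or graph-based ranking like TextRank.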

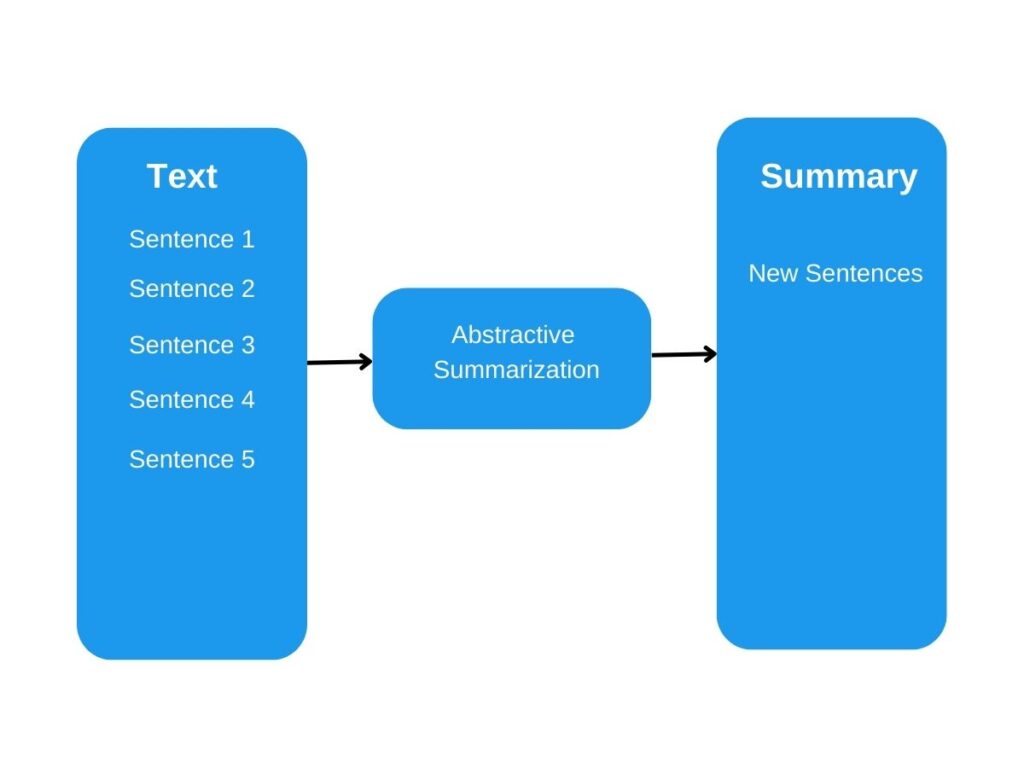

An abstractive summarizer, on the other hand, generates new sentences. It tries to capture the meaning of the whole text and produces its own words and phrases that convey the most important facts found in it. Abstractive summarization techniques are more complex than extractive ones and are also more computationally expensive.

To reduce the word count of an essay, an abstractive summarizer works well because it preserves the relationships between words and the context in which they are used.

This calls for an encoder-decoder architecture, since summarization is a sequence-to-sequence task and this architecture can capture long-term dependencies.
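Formally, an encoder-decoder model defines the probability of a summary $y = (y_1, \dots, y_T)$ given an input document $x$ by factorizing it over the output tokens: the encoder reads $x$ once, and the decoder generates each token conditioned on the encoder's representation and on the tokens it has already produced. This is the standard autoregressive sequence-to-sequence formulation, not something specific to textify.ai, and the notation $\mathrm{Enc}(x)$ is introduced here for illustration:

$$
p(y \mid x) = \prod_{t=1}^{T} p\left(y_t \mid y_{<t},\, \mathrm{Enc}(x)\right)
$$

where $y_{<t} = (y_1, \dots, y_{t-1})$ and $\mathrm{Enc}(x)$ denotes the encoder's hidden states for the whole input. Because every decoding step can attend to all of $\mathrm{Enc}(x)$, information from anywhere in the document is available when each summary word is produced.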

Experimentation and Results

When we tested the base model on essays, the results were unsatisfactory. For essays, we would ideally like the summary to fall in the range of 100–300 words, but the base model tried to condense all of the information into 50–70 words. For example, a 600-word essay given to the model produced a summary of about 50 words. The model also gave more prominence to the first and last paragraphs, so sentences from the middle of the essay were missing from the summary.
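The mismatch is easier to see as a compression ratio, the summary length divided by the essay length. For the 600-word example above, the base model kept about 8% of the words, whereas the 100–300 word target corresponds to keeping roughly 17% to 50% of them:

$$
\frac{50}{600} \approx 0.08
\qquad\text{versus}\qquad
\frac{100}{600} \approx 0.17 \;\text{ to }\; \frac{300}{600} = 0.5
$$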

Improving the summarizer model to reduce word count of essays

1. Chunking the essay

To address this problem, we split the essay into buckets of 500 words so that each bucket given to the model received equal prominence. We then combined the summaries of all the buckets to produce the final text.
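The chunking step can be sketched in a few lines of Python. This is a minimal sketch assuming words are separated by whitespace; `chunk_words`, `summarize_essay`, and the pluggable `summarize_chunk` callable are hypothetical names, not textify.ai's code, and any per-chunk summarizer (for example a BART model) can be passed in.

```python
from typing import Callable, List


def chunk_words(text: str, chunk_size: int = 500) -> List[str]:
    """Split text into consecutive buckets of at most `chunk_size` words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def summarize_essay(
    text: str,
    summarize_chunk: Callable[[str], str],
    chunk_size: int = 500,
) -> str:
    """Summarize every bucket independently, then join the partial summaries in order."""
    summaries = [summarize_chunk(chunk) for chunk in chunk_words(text, chunk_size)]
    # Drop empty partial summaries so the joined text has no stray spaces.
    return " ".join(s.strip() for s in summaries if s.strip())


# Usage with a stand-in summarizer that keeps only the first word of each bucket.
essay = " ".join(f"w{i}" for i in range(1200))
print([len(c.split()) for c in chunk_words(essay)])          # bucket sizes
print(summarize_essay(essay, lambda chunk: chunk.split()[0]))
```

Because each bucket is summarized separately, the middle of the essay gets its own share of the output instead of being crowded out by the opening and closing paragraphs. Splitting strictly every 500 words can cut a sentence in half, so packing whole sentences into each bucket is a natural refinement.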

2. BART model

BART (Bidirectional and Auto-Regressive Transformers) is a sequence-to-sequence model pre-trained as a denoising autoencoder. A fine-tuned BART model takes a text sequence as input and produces a different text sequence as output, which makes it a good fit for text summarization.
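"Denoising autoencoder" means the model is pre-trained to reconstruct an original document from a corrupted copy. The BART paper's corruptions include sentence permutation and text infilling, where spans with Poisson-distributed lengths (λ = 3) are each replaced by a single mask token. The sketch below is a simplified, hypothetical approximation of those two noising functions for illustration only: it is not the fairseq or Hugging Face implementation, and the 30% mask ratio and `<mask>` token string are assumed defaults.

```python
import math
import random
import re
from typing import List


def sample_poisson(lam: float, rng: random.Random) -> int:
    """Draw a Poisson(lam) sample with Knuth's multiplication method."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        k += 1
        p *= rng.random()
        if p <= threshold:
            return k - 1


def permute_sentences(sentences: List[str], rng: random.Random) -> List[str]:
    """Sentence permutation: return the sentences in a shuffled order."""
    shuffled = sentences[:]
    rng.shuffle(shuffled)
    return shuffled


def text_infilling(tokens: List[str], rng: random.Random, mask_ratio: float = 0.3,
                   lam: float = 3.0, mask_token: str = "<mask>") -> List[str]:
    """Replace random spans (Poisson-distributed lengths) with ONE mask token each."""
    budget = round(len(tokens) * mask_ratio)  # maximum number of tokens to remove
    out: List[str] = []
    i = 0
    while i < len(tokens):
        if budget > 0 and rng.random() < mask_ratio:
            span = min(sample_poisson(lam, rng), budget, len(tokens) - i)
            out.append(mask_token)  # the whole span collapses into a single mask
            if span == 0:
                out.append(tokens[i])  # zero-length span: a mask is inserted, nothing removed
                i += 1
            else:
                i += span
                budget -= span
        else:
            out.append(tokens[i])
            i += 1
    return out


def noise_document(text: str, seed: int = 0) -> str:
    """Corrupt a document; pre-training teaches the model to map this back to `text`."""
    rng = random.Random(seed)
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    tokens = " ".join(permute_sentences(sentences, rng)).split()
    return " ".join(text_infilling(tokens, rng))


print(noise_document("The cat sat. The dog ran. Birds sing loudly. Fish swim fast.", seed=1))
```

Since a single mask can hide several words, the model must also learn how much text is missing, which is part of why this objective transfers well to generation tasks such as summarization.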

Facebook Bart Large CNN

The model we used is facebook/bart-large-cnn from Hugging Face, developed by Facebook. It has 12 encoder layers and 12 decoder layers with a hidden size of 1024, about 406M parameters in total, and has been fine-tuned on the CNN/DailyMail dataset.

Distil Bart 12–6 CNN

In a further trial, we experimented with DistilBART (sshleifer/distilbart-cnn-12-6), which has also been fine-tuned on the CNN/DailyMail dataset. With 12 encoder layers and 6 decoder layers, it is a much lighter model than facebook/bart-large-cnn.

Running the models through AutoModel and AutoTokenizer gave us more detailed summaries in every case than running them through the pipeline.

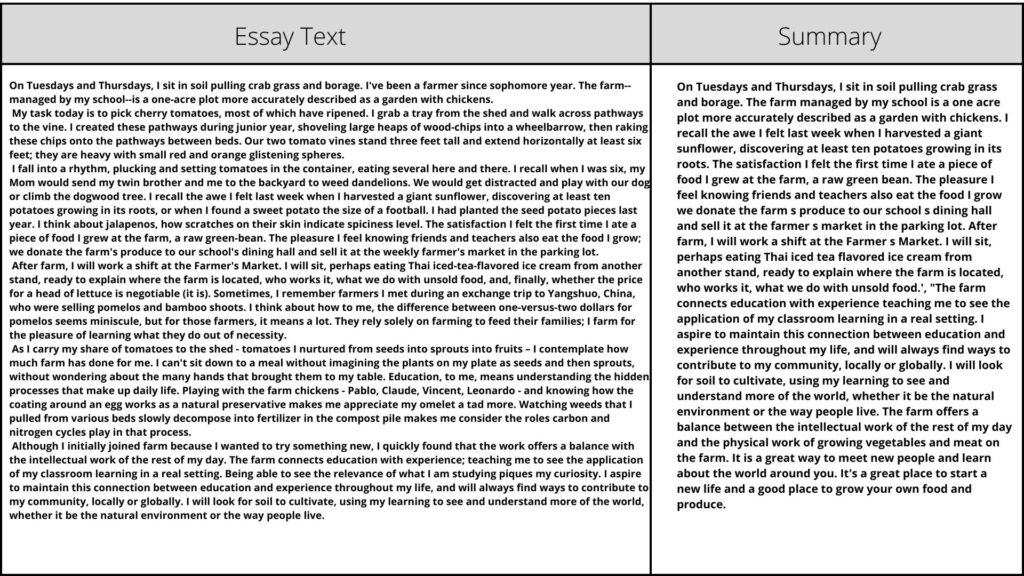

After chunking the essay text and generating the summaries, the facebook/bart-large-cnn model performed well: the context stayed intact, and the overall key points were preserved in the summary.

Here is one example that illustrates how the model works.

Conclusion

Identifying the right Transformers model for the task is essential. The Hugging Face library provides access to hundreds of models, but picking the most relevant one is a difficult task in itself. Since our goal was to generate essay summaries that reduce the number of words, we started experimenting with BART models.

In this article, we explained in technical terms how textify.ai uses text summarization to reduce the word count of an essay. You can head over to the website to try it out!